Transcribing recordings is the bane of journalists, meeting note-takers and students everywhere. Enter Otter.ai, a speech-to-text transcription tool, available online or as a phone app, that uses artificial intelligence (AI) and machine learning (ML) to transcribe speech either live or from an audio file uploaded after the event.

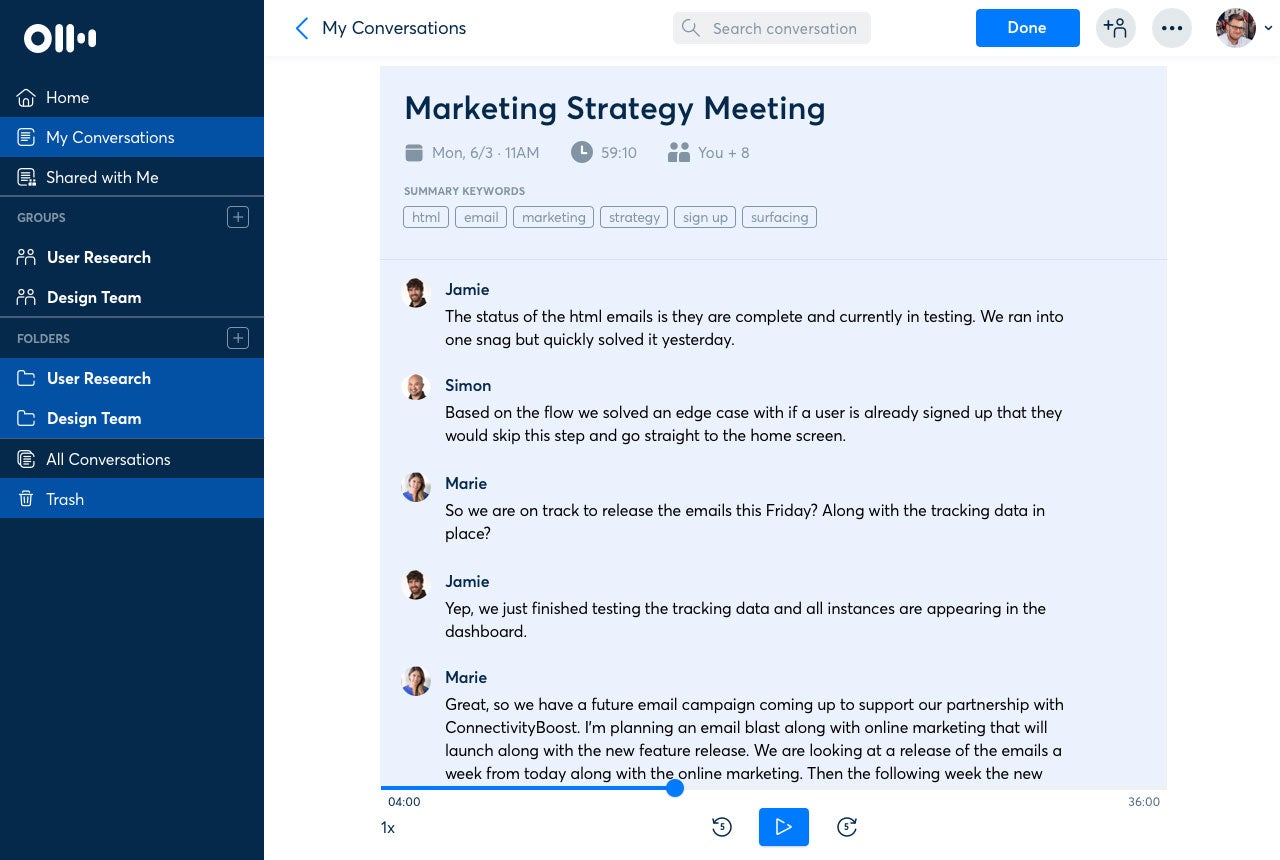

Otter Live Notes for Zoom has proven particularly popular during lockdown, enabling meeting participants to open a live transcript directly from Zoom and then view, highlight, comment on and add photos to it to create meeting notes collaboratively.

Otter.ai VP of Product Simon Lau talked to Verdict about the product, shared his top tips for users and revealed how the company got its name.

Berenice Baker: Are you finding a lot more customer engagement during lockdown now people are on Zoom all the time?

Simon Lau: The pandemic has forced everybody to adopt technology much quicker, so it’s very timely that we provided our integration with Zoom. Whether it is for deaf or hard-of-hearing students, for participants with accessibility needs, for students who want to take notes, or for meetings and interviews where you don’t want to worry about transcribing the entire meeting yourself, Otter is right there.

If you hear anything interesting, you can just click the highlight button to highlight the last sentence or two, so you can come back to it more easily, review it and play back just those important bits of your conversation.

The engine behind Otter is called Ambient Voice Intelligence. Could you explain how that works?

We built the speech-to-text technology that’s at the very core: turning spoken English in audio or video into written English. So whether you call it speech-to-text or ASR [automatic speech recognition], that’s the core functionality of our speech engine. That’s one component of our Ambient Voice Intelligence, but on top of that, we also have speaker diarisation and speaker identification.

Speaker diarisation is simply separating the speakers into speaker one, speaker two and so on. So, without knowing Berenice’s and Simon’s voices, for example, the transcript should say speaker one, speaker two. Currently, we can only do that after the fact, meaning that at this stage you don’t see the labels in real time. But once this recording is done, we will be able to take another pass, analyse the entire recording and tell speaker one from speaker two. In the speech industry, this is called diarisation (or speaker separation). Speaker identification takes it further.

In the Otter product, you can click on speaker one and type in Berenice, then click on speaker two and type in Simon, and Otter goes back and relabels them. It also takes the opportunity to create voiceprints for you and me, so it relabels the entire conversation in this particular recording and also applies them to future conversations. Let’s say we have a follow-up in six months or a year on another Zoom call; your voice will then be recognised.
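Otter hasn’t said how its voiceprints work internally. A common approach in speaker recognition is to represent each speaker as an embedding vector and compare new audio against the stored vectors with cosine similarity. The minimal Python sketch below assumes those embeddings already exist (produced by some speaker-embedding model not shown here); `VoiceprintStore`, `identify`, `relabel` and the 0.8 threshold are hypothetical illustrations, not Otter’s implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VoiceprintStore:
    """Hypothetical store mapping a speaker's name to a voiceprint (embedding vector)."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed minimum similarity to accept a match
        self.voiceprints = {}

    def enroll(self, name, embedding):
        # Called once the user has typed a name for a diarised speaker label.
        self.voiceprints[name] = list(embedding)

    def identify(self, embedding):
        # Return the closest enrolled name, or None if nobody is similar enough.
        best_name, best_score = None, -1.0
        for name, stored in self.voiceprints.items():
            score = cosine_similarity(embedding, stored)
            if score > best_score:
                best_name, best_score = name, score
        return best_name if best_score >= self.threshold else None


def relabel(transcript, label_to_name):
    """Swap generic labels such as 'Speaker 1' for names; unknown labels are kept."""
    return [(label_to_name.get(label, label), text) for label, text in transcript]
```

In a follow-up call, a new speaker cluster’s embedding would be passed to `identify`, and the returned name (if any) would replace the generic label via `relabel`.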

We’re also building out other types of intelligence. A starting point is summary keywords, because who has time to review the entire 60-minute recording of a podcast? Otter provides a set of summary keywords and an associated word cloud that you can click on. You get the gist of the conversation at a glance and can then move on. If you want to drill down a little more, you can click on key terms. That turns the conversation into actionable meeting notes.
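The interview doesn’t say how Otter chooses its summary keywords. One simple baseline, shown here purely as an assumption, is to count how often each word appears after dropping common stop words; the counts can also set word sizes in a word cloud. The function name `summary_keywords` and the tiny stop-word list are hypothetical.

```python
import re
from collections import Counter

# Illustrative stop-word list; a real system would use a much larger one.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "it", "that",
    "we", "you", "i", "on", "for", "this", "so", "be", "are", "with",
}


def summary_keywords(transcript, top_n=5):
    """Return the top_n (word, count) pairs by frequency, ignoring stop words and very short words."""
    words = re.findall(r"[a-z0-9']+", transcript.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(top_n)
```

For example, `summary_keywords("Zoom transcription is useful. Zoom meetings need transcription. Zoom calls.", top_n=2)` gives `[("zoom", 3), ("transcription", 2)]`. Production systems typically weight terms more cleverly (for instance, down-weighting words common across all conversations), but frequency counting shows the basic idea.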

We also let users highlight and comment by selecting a sentence in the conversation, and it is automatically collected into a highlight summary and a comment summary. At the very top of your Otter conversation, you can click on, say, your three highlights or comments and go back to listen to and review only those three.

It is ambient in the sense that Otter does not talk back to you; this is not like Siri, Google Assistant or any of the voice assistants that you’re used to. This is not about question and answer. This is about Otter applying speech and intelligence to your conversations silently, ambiently, behind the scenes to provide new value.

We’re using Otter Live Notes for Zoom. Are there plans for any more future collaborations with other communications tech providers?

Yeah, definitely. Google Meet, Microsoft Teams, WebEx, RingCentral: those are just a few that come to mind. We don’t have a specific roadmap or timeframe to share yet, and we are still a very small team, so we’re laser-focused on building out the functionality for Zoom.

Some of these products offer their own transcription software. How does Otter compete with that?

We want to provide a platform-agnostic way for our users to capture conversations and to review, play back, collaborate on and share the content that they deem valuable. We want to give our users the choice, because they might meet with people who use all sorts of different platforms. You or your organisation might have a preferred platform for these remote and virtual meetings, but you are bound to be invited onto many platforms and you still want to take notes on those. That’s why we offer a platform-agnostic way of capturing all of these important conversations and turning them into useful knowledge and useful notes.

There’s quite a big demand among your user base for Otter to learn different languages. Is that something you’re looking at and, if so, which are you prioritising?

We certainly want to expand to other languages. It wouldn’t come as a surprise if I said Spanish, Japanese, Mandarin and Cantonese Chinese, German, Italian and French; those are the top languages that people request. We don’t have a time frame to share at this point. Expanding to other languages is in our future plans, but right now there’s still room for improvement in English, covering all the different accents. Hopefully, our laser focus on English, providing top-of-the-line accuracy across all types of environments, gives us the differentiation to compete against all the alternative solutions.

When novel words come into use, do you wait for the AI to pick them up, or do you prime it? For example, hardly anyone had said “Covid-19” before last February.

We have figured out a way to pick up new trending words quickly; it does require human intervention, so it’s not completely automatic. Even at the individual account level, you as an Otter user can go into your settings and add custom vocabulary.

That’s particularly useful for any organisation that uses acronyms. It makes sense to provide a very simple and easy way for customers to add custom vocabulary such as acronyms, jargon and unique spellings of people’s names. There might be podcasters interviewing people who want to make sure their guests’ names are transcribed correctly.

Do you plan to use the data you collect for wider analysis, for example, an insight into how the English language is developing over the years?

First and foremost, we want to provide the data for our users to analyse. We can’t share much about what we plan to do further into the future, but the goal is to provide value to our users. A business, school or organisation may license Otter because it wants to retain the knowledge from its meetings and its sales teams, or, for schools, all the lectures and educational material.

If you think along the lines of those use cases, each vertical has specific requirements for the type of conversation analysis it wants and has come to expect from Otter. So we’re going to listen to those customers and build out a roadmap to satisfy those analysis requirements.

Do you have any plans for the next year or so?

Nothing specific, but I can speak broadly. We’re very excited to roll out new functionality for our speech engine and our speaker identification, which will be the next leap in improvement for both.

You have a blog which shares tips with customers. What would be your top tip for our readers?

The top tip that I love to share with our users is to use Otter to record and transcribe live. That way you can take notes simply by highlighting with one tap, faster than you can type notes into a document or jot things down with pen and paper.

By the time you finish the recording, Otter gives you both the detailed transcript and the summary of highlights containing just the important bits.

How did Otter get its name?

The giant otter is one of the chattiest species on earth. The adults have 22 different calls and the babies have 11 calls of their own too. Most interestingly, most of the calls suggest an action, depending on the context. Our technology is all about accurately understanding human talk and helping turn it into action, so we thought Otter was a great name. Plus, it’s a cute animal!
