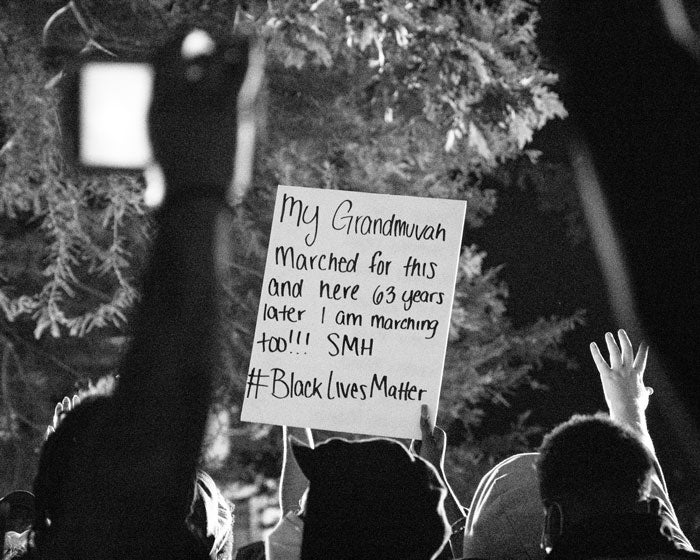

The protests that have sparked across the US and around the world following the killing of George Floyd by a Minneapolis police officer have drawn sharp attention to the systematic racism embedded in Western society, and in particular, law enforcement. But one of the technologies being used by police amid the protests is set to exacerbate the already severe inequalities in the criminal justice system: facial recognition.

Exactly which facial recognition technologies are deployed by which police forces remains unclear; many police forces do not disclose this information, and the major providers to police forces do not disclose the names of their clients. However, it is clear that the technology is extremely widespread, with major providers including Amazon, through its Rekognition software, and Clearview AI.

A 2016 investigation by the Center on Privacy & Technology at Georgetown Law found that one in four police forces were using facial recognition, and that half of adults in the US were in a police facial recognition database, but those numbers are now likely to be considerably higher.

Clearview AI’s technology was used by more than 600 law enforcement agencies in 2019, according to Recode, and it has raised considerable alarm because it doesn’t just compare images to those held in government databases, but to images scraped from social media too. This means that – according to the company’s own marketing materials – while a facial recognition search using an FBI database will see an image compared against 411 million others, with Clearview AI this number rises to 3 billion.

This has prompted numerous police forces in the US and beyond to adopt the technology, with forces noting the speed and effectiveness of the platform in a recent New York Times article.

But there is a problem with facial recognition technology, and it’s a big one: facial recognition technology as it is currently deployed is for the most part racist.

Facial recognition as it is used today is generally racist

Despite their widespread use, the majority of commercially available facial recognition technologies are more effective at matching white faces than those of people of colour.

This has been repeatedly shown in a host of different studies, looking at different commercially available facial recognition products.

In December 2019, the US National Institute of Standards and Technology (NIST) published an exhaustive study of 189 facial recognition algorithms made by 99 different companies, which found that the majority consistently generated a higher rate of false positives for black and Asian people than for white people – with many producing a rate 10 times higher and, at worst, 100 times higher.
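To make the "10 times higher" comparison concrete, the sketch below computes a false positive rate per demographic group and the ratio between two groups. It is only an illustration: the function name, the group labels and the trial counts are invented for this example and are not drawn from the NIST study or its methodology.

```python
from collections import defaultdict


def false_positive_rates(trials):
    """Return the false positive rate for each group.

    `trials` is an iterable of (group, is_same_person, predicted_match)
    tuples. A false positive is a pair of images of two different people
    that the system nevertheless reports as a match.
    """
    impostor_pairs = defaultdict(int)  # comparisons of two different people
    false_matches = defaultdict(int)   # ...that were wrongly declared a match
    for group, is_same_person, predicted_match in trials:
        if not is_same_person:
            impostor_pairs[group] += 1
            if predicted_match:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in impostor_pairs.items()}


# Hypothetical data: 1,000 different-person comparisons per group.
trials = (
    [("group_a", False, True)] * 1 + [("group_a", False, False)] * 999
    + [("group_b", False, True)] * 10 + [("group_b", False, False)] * 990
)

rates = false_positive_rates(trials)
ratio = rates["group_b"] / rates["group_a"]
print(rates)
print(f"group_b is falsely matched about {ratio:.0f} times as often as group_a")
```

In this made-up case one group is wrongly matched once per 1,000 comparisons and the other ten times per 1,000, which is the kind of gap a "10 times higher" finding describes.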

This follows a host of other studies showing similar issues with such technologies, with issues identifying women of colour regularly being highlighted as a particular concern.

A 2019 study by MIT Media Lab’s Joy Buolamwini of five facial recognition products, including those made by Microsoft, Amazon and IBM, found that all were less effective at identifying women with dark skin than men with white skin. Amazon’s Rekognition software, for example, identified light-skinned men with a 100% success rate, but had an error rate of 31% for dark-skinned women.

This isn’t because the underlying technology is inherently racist, but because the data it is trained on is not representative of the populations it is used on. Amazon has even stressed this fact itself, saying that when trained on appropriate datasets Rekognition is completely accurate.

However, the fact remains that facial recognition technology as it is used in the real world is frequently biased.

“We have a technology that was created and designed by one demographic that is mostly only effective on that one demographic and [technology companies] are trying to sell it and impose it on the entirety of the country,” summarised US Representative Alexandria Ocasio-Cortez in a 2019 congressional hearing.

“We have the pell-mell data sets being used as something that’s universal when that isn’t actually the case when it comes to representing the whole sepia of humanity,” added Joy Buolamwini, MIT Media Lab researcher and founder of the Algorithmic Justice League, in the same hearing.

It is important to note, however, that not all facial recognition technologies are biased against people of colour, and it is entirely possible to create such technology in a way that is unbiased – it just has to be well made. The landmark NIST study noted that a small number of algorithms that it assessed did not show bias, and these generally had a higher accuracy rate.

Others are also keen to draw attention to well-made, non-racist facial recognition technologies.

“Facial recognition is a piece of tech – very plannable, scalable, predictable. All the mainstream solutions that we have come across and analysed weren’t biased,” says Roman Grigoriev, CEO and co-founder of Splento, a provider of professional photography and videography services that includes facial recognition among its offerings.

“But as they say: garbage in, garbage out. Bias data in, biased results out. So the only way to avoid bias is to solve it at the human level, not algorithms.”

Clearview AI has also published a study of its own technology that purports to show it is 100% accurate, having been assessed using ACLU methodology. However, this has attracted criticism from the ACLU, which called the report “absurd on many levels” and accused the company of attempting to manufacture an endorsement.

False positives produce false arrests

The error rate for many facial recognition technologies for people of colour is of particular concern, because in many cases these errors are taking the form of false positives, where people of colour are being incorrectly identified as criminals or suspects in crimes.

Tests undertaken on Amazon’s Rekognition software in 2019 by the American Civil Liberties Union (ACLU) of Massachusetts using images of New England athletes misidentified 27 black athletes as suspected criminals in a database of mugshots.

“There’s a case with Mr Bah, an 18-year-old African American man who was misidentified in Apple stores as a thief, and in fact he was falsely arrested multiple times because of this kind of misidentification,” said Buolamwini.

“And then if you have a case where we’re thinking about putting facial recognition technology on police body cams in a situation where you already have racial bias, that can be used to confirm the presumption of guilt even if that hasn’t necessarily been proven, because you have these algorithms that we already have sufficient information showing fail more on communities of colour.”

“Face recognition tools have a higher chance of misidentifying a person of colour than a white person, which could lead to false arrests and detainment,” agrees Paul Bischoff, privacy advocate at Comparitech.com.

“That could exacerbate racial bias that already exists among the police.”

Facial recognition is being used to police the George Floyd protests

With so many different police forces having signed up for facial recognition technologies, it is not a question of whether they are being used to police the George Floyd protests, but how.

“Facial recognition tech has improved so much over the past few years both in quality and speed, that it’s highly likely that US police forces will be using numerous different facial recognition solutions before, during and after,” says Splento’s Grigoriev.

“When you can identify known criminals from a safe distance or analyse the emotional state of crowds to understand how soon the tide may turn from a peaceful protest to a full blown riot – it will be very shortsighted of the police not to use the endless list of solutions at their disposal.”

While some have speculated that police may now be using real-time facial recognition with the assistance of bodycams, many believe that forces are more likely to be deploying after-the-fact facial recognition, where footage recorded at protests is used to identify and arrest protestors in the aftermath.

“Police departments in the US can use facial recognition at their own discretion. There are very few laws that rein in how, when, and why facial recognition can be used by law enforcement,” says Comparitech’s Bischoff.

“That being said, I don’t think there’s widespread use of real-time face recognition at protests in the US yet. It’s more likely that police will collect images of looters and protesters from CCTV cameras and other images, then match those images against their mugshot databases after the protests have ended to assist in arrests.”

Given the levels of false positives that many of these technologies produce for people of colour, their use in anti-racism protests is a particularly bitter irony. It is highly likely that we will see people misidentified and falsely arrested as a result of the technology, based on footage from protests against the very thing it helps perpetuate.

“Not only are Black lives more subject to unwarranted, rights-violating surveillance, they are also more subject to false identification, giving the government new tools to target and misidentify individuals in connection with protest-related incidents,” wrote Buolamwini in an article on the issue entitled We Must Fight Face Surveillance to Protect Black Lives.

This isn’t an issue that is unique to the US, either. Police forces in many parts of Europe are beginning to deploy the technology, such as in the UK where London’s Met Police are using the technology, despite concerns surrounding its accuracy and bias.

And as the protests spread beyond the US, this same concern is arising.

Facial recognition needs urgent and significant regulation

While a number of cities in the US have now banned facial recognition outright, the technology is in general woefully under-regulated.

“The US certainly needs more regulation around the use of facial recognition, especially when it comes to law enforcement,” says Comparitech’s Bischoff.

“There are reports that the technology has also been used to identify protesters in the wake of the current turmoil. Ground-breaking though the technology is, without a cohesive regulatory underpinning it, we may find ourselves at some point down the line operating a system we can no longer control,” adds Keith Oliver, head of international at Peters & Peters LLP.

In Europe and the UK, meanwhile, laws governing privacy are currently doing much of the heavy lifting on facial recognition regulation, which some argue is enough to protect citizens.

“At the moment, facial recognition regulation is covered by privacy (of course with the now enlarged fines of GDPR) and human rights law,” says Elle Todd, data privacy legal expert and partner at Reed Smith.

“These laws are broad enough to cover the issues of facial recognition and are supplemented over time through guidance, codes and case law. There shouldn’t be a need for further regulation on this specific issue if regulators like the Information Commissioner’s Office are proactive in providing guidance, and keep up to date with current trends and use cases.”

Is a federal office the answer?

However, in the US there is comparatively little regulation at a federal level, although Congress is currently exploring applying limits to facial recognition technology, and in particular how it can be used by law enforcement.

The Algorithmic Justice League has gone a step further, proposing the establishment of a federal office similar to the US Food and Drug Administration (FDA), which would regulate facial recognition technologies.

The proposal is outlined in a white paper published at the end of May, penned by Buolamwini along with Erik Learned-Miller, Vicente Ordóñez and Jamie Morgenstern, which highlights the severe legislative gaps that currently exist, and how the regulatory needs of facial recognition are surprisingly similar to those of pharmaceuticals.

The paper notes that such a federal office would be challenging to create, but highlights that there is appetite for such an organisation among the technology industry.

“There is growing pressure for the regulation of technology companies, and even some evidence that the companies themselves would like some regulatory guidance, if for no other reason than to ensure a level playing field among the firms,” the white paper states.

However, others are sceptical that such an office could ever be established.

“From a UK and European perspective, the combination of our stringent data protection regime (notably the GDPR), and specific authorities in each country having powers to enforce that regime, already provides a framework – albeit one that covers all technologies and is not specifically focused on facial recognition,” says Todd.

“It would be highly unlikely we see something like in the US where, in contrast, the approach taken to regulation and enforcement tends to be through the adoption of a more specific issue or sector specific requirements at a national and/or at a state level.”

Facial recognition with serious racial issues is being used by police in protests against systematic racism, in a clear example of the system being stacked against protesters. With so much at stake, and facial recognition bias pouring fuel on an already raging fire, it’s clear that something needs to be done if the technology isn’t to further exacerbate serious problems of racial inequality.
