Amazon Web Services (AWS) has brought Project Rainier, an AI compute cluster, online across several data centres in the US.

The deployment comes less than a year after AWS first disclosed its plans for the project, which aims to deliver significant AI training and inference capacity.

Project Rainier was developed in collaboration with Anthropic, an organisation focused on AI safety and research.

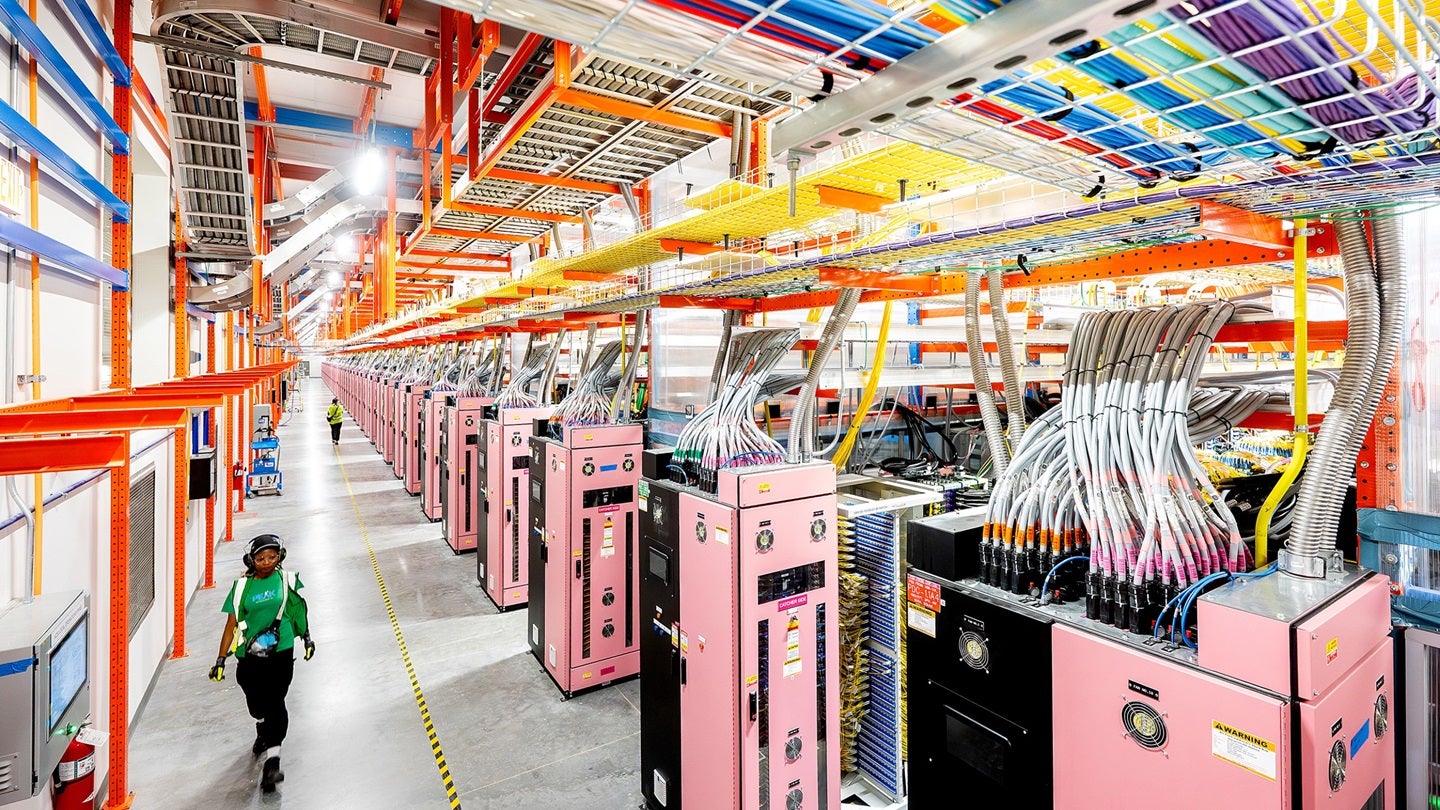

The cluster has been built around nearly 500,000 AWS Trainium2 chips, making it the largest Trainium2-based infrastructure assembled to date.

According to AWS, the compute resources delivered by Rainier provide more than five times the performance Anthropic used for earlier AI model development.

Anthropic is using Project Rainier primarily to develop its Claude AI model, and is projected to be running training and inference workloads on more than one million Trainium2 chips by the end of 2025.

The AI company is leveraging this infrastructure to support further iterations of Claude.

Technically, Project Rainier combines EC2 UltraClusters with Trainium2 UltraServers. Each UltraServer groups four physical servers with 16 Trainium2 chips each.
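The figures above imply a simple chip count per UltraServer. As a rough sketch only, the Python below works through that arithmetic; the total of 500,000 chips is an assumption that rounds up the "nearly 500,000" figure, the function names are hypothetical, and the resulting UltraServer count is an estimate rather than a number AWS has published.

```python
import math

# Figures taken from the article.
SERVERS_PER_ULTRASERVER = 4
CHIPS_PER_SERVER = 16

# Assumption: "nearly 500,000" chips rounded up for illustration.
TOTAL_CHIPS = 500_000


def chips_per_ultraserver(servers=SERVERS_PER_ULTRASERVER,
                          chips=CHIPS_PER_SERVER):
    """Return the number of Trainium2 chips in one UltraServer."""
    return servers * chips


def ultraservers_needed(total_chips, per_ultraserver):
    """Return how many whole UltraServers are needed to hold total_chips."""
    return math.ceil(total_chips / per_ultraserver)


per_us = chips_per_ultraserver()
print(per_us)                                    # 64
print(ultraservers_needed(TOTAL_CHIPS, per_us))  # 7813
```

On these assumptions, each UltraServer holds 64 chips, so a cluster of roughly 500,000 chips would correspond to somewhere around 7,800 UltraServers.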

Communication within these clusters runs over NeuronLinks, high-speed connections designed to reduce latency and increase throughput.

Cross-cluster traffic uses Elastic Fabric Adapter networking, enabling low-latency, high-bandwidth data transfer between servers housed both within and across facilities.

The underlying Trainium2 chip architecture is purpose-built by AWS for large-scale machine learning workloads.

A single chip can perform trillions of operations per second. With hundreds of thousands deployed in parallel, Project Rainier marks a 70% increase in AWS’s AI compute infrastructure compared to previous deployments, according to the company.

AWS manages all elements of the system stack internally, from chip design and server architecture to data centre layout and operational software.

This approach allows rapid iteration on hardware and software platforms, as well as targeted optimisations for power delivery and system reliability.

AWS distinguished engineer and Trainium head architect Ron Diamant said: “Project Rainier is one of AWS’s most ambitious undertakings to date.

“It’s a massive, one-of-its-kind infrastructure project that will usher in the next generation of artificial intelligence models.”

On sustainability and resource management, AWS reported that all electricity consumed by its services in 2023 was matched with renewable energy sources.

The company continues to invest in large-scale renewable projects, nuclear power, and battery storage while maintaining a corporate target of net-zero carbon emissions by 2040.

Infrastructure upgrades specific to Project Rainier sites include data centre components intended to achieve up to 46% lower mechanical energy consumption and a 35% reduction in embodied carbon within construction materials such as concrete.

In a separate development, AWS plans to invest a minimum of $5bn in South Korea through 2031 to develop new AI data centres, according to the South Korean presidential office.

This commitment was announced during a meeting between AWS CEO Matt Garman and South Korean President Lee Jae Myung on the sidelines of the Asia-Pacific Economic Cooperation summit.

In September 2025, IBM and AWS expanded their partnership to accelerate secure cloud adoption and digital transformation in the Middle East. IBM Consulting will combine its AI, hybrid cloud, and industry expertise with AWS’s cloud infrastructure to support organisations in Saudi Arabia, the UAE, and nearby markets.