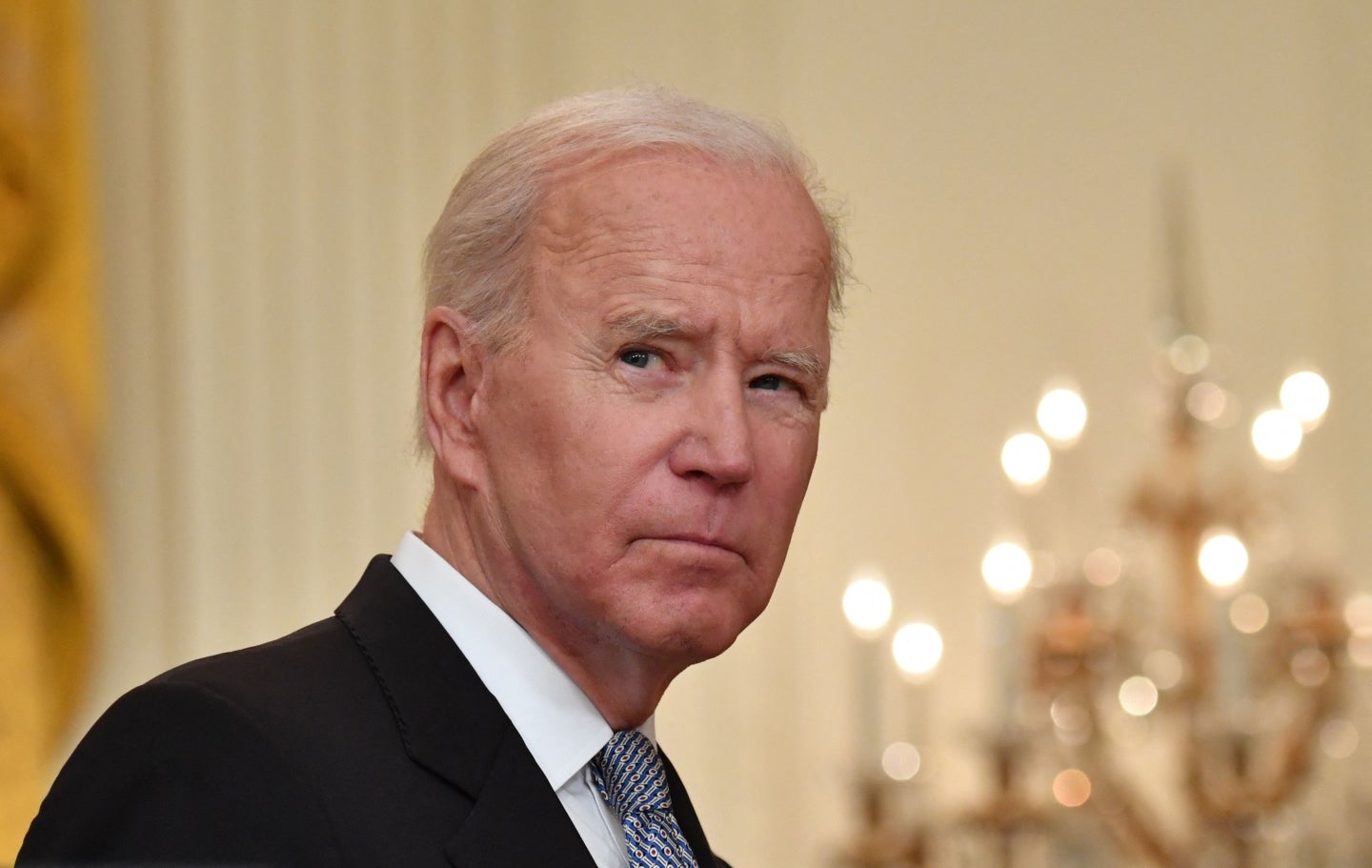

On 30 October 2023, the Biden administration signed an executive order on the safe and secure development of AI. A month after the order was signed, Verdict spoke to US tech insiders about the impact it has had so far.

The order aimed to set out standards for tech companies developing AI, ensuring that the rights of consumers and workers behind AI were considered whilst promoting US innovation. The order explicitly expects tech companies developing AI tools to share safety test results with the US government directly.

Balancing regulation with innovation has been at the forefront of the AI debate.

Senior vice president at Orlando-based AI provider Kore.ai, Gopi Polavarapu, says that this struggle between innovation and regulation is not unique to AI.

“It’s really important that there is a balance between innovation and regulation to guard against potential future misuse and risks,” Polavarapu says, “as we’ve seen through history where the US government has regulated powerful technologies like nuclear fission, genetic engineering and seat belt requirements for cars.”

Polavarapu is optimistic that the order will foster consumer confidence in AI across sectors outside of technology. That confidence is paramount to AI's successful adoption by businesses across sectors.

However, Polavarapu reiterates that the executive order remains an early step in securing AI safety. International co-operation and cross-border data agreements will continue to evolve almost constantly in the near future. In the meantime, businesses developing or adopting AI will need to be flexible and could face stricter regulations in future rather than safety recommendations.

Nonetheless, opinion remains divided on just how the Biden administration’s executive order will promote tech innovation.

Light touch regulation for innovation

In Mountain View, California, Sudheesh Nair, CEO of AI-powered business intelligence company ThoughtSpot, questions the order's efficacy.

Nair states that whilst well intentioned, Biden’s AI order has added more red tape to the AI sector and created ambitious, stringent regulation that could stifle an otherwise burgeoning technology.

Clarity, he thinks, may instead be found in chaos.

“Although a lighter touch approach may unnerve some and it can be easier to throw stones, embracing the chaos can prove to be a strength down the line,” Nair says. “What will be key now is acknowledging the ongoing need to adapt regulation as the technology matures, delicately treading the fine line between government intervention and industry autonomy.”

In its thematic intelligence report on the tech regulation landscape, research analyst company GlobalData names AI ethics as one of the most important, and divisive, regulatory topics in 2023.

Before the executive order was signed, GlobalData found, the US had been lagging behind other regions such as the UK and EU on AI regulation, leaving Big Tech to self-regulate.

Governmental efforts to regulate AI that preceded the executive order largely focused on the AI technology itself, says Michael Covington, vice president of software company Jamf.

“Biden’s executive order is significantly broader and takes a long-term societal perspective,” says Covington, “with considerations for security and privacy, as well as equity and civil rights, consumer protection, and labour market monitoring.”

In Covington's view, the executive order has brought the ripple effects of AI into focus.

Covington states that governments have the advantage of being able to view wider society with a clearer, more accurate lens than tech companies. With a technology as disruptive to the labour market as AI, this wider perspective cannot be left behind.

However, he still insists that when it comes to deciding the reliability of AI tools, the market remains in control. This, he believes, should not be silenced for fear of inaccuracies.

Beyond its first month, the executive order is likely to be the first of many regulatory announcements surrounding AI in the US and globally. With an abundance of AI safety announcements in the news, including the launch of the US’ own AI Safety Institute at the UK’s AI Safety Summit, it may be easy to harbour scepticism over the pragmatism of such initiatives.

Such doubts hardened over the UK AI Safety Summit's limited guest list and closed-door conversations, but Oxford University Professor Ciaran Martin reminds us not to let the perfect be the enemy of the good.

“The alternative [AI Safety Summit] was not a better event – the alternative was nothing at all,” he states. “Going forward, we will need to broaden the conversation and make sure it’s not captured by the existing tech giants. But Bletchley was a good start and the [government] deserves credit for doing it.”

Whilst the Biden administration has made holistic efforts to guardrail AI, it will need to continue global collaboration to avoid a fragmentation of the AI market.