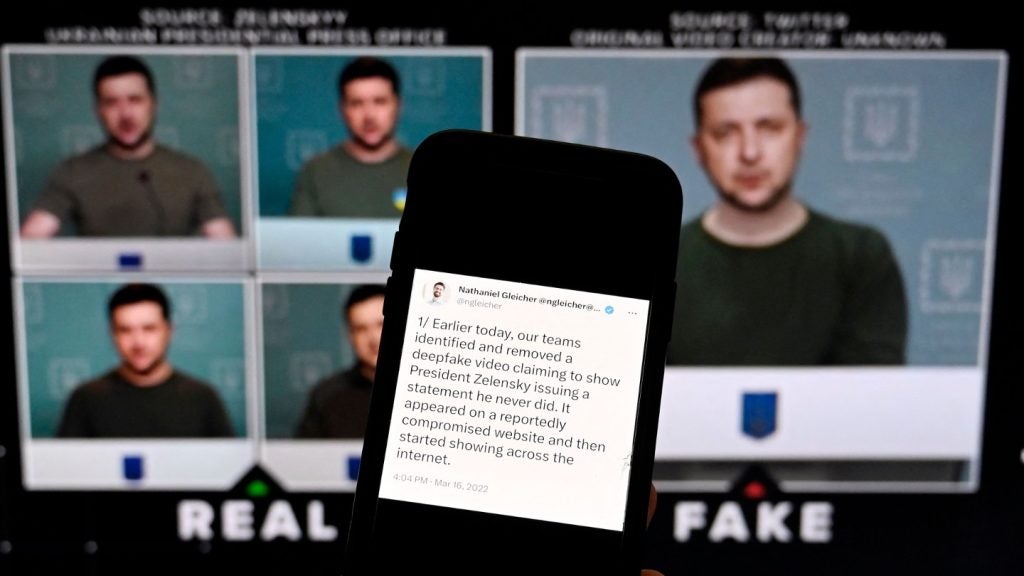

In the future of work, the use of brain-monitoring neurotechnology to supervise and hire employees looks increasingly like a real possibility.

At a time of widespread interest in neurotechnology devices and applications, some guidance is needed to ensure the interests of employees, employers, and society are safeguarded.

Advances in neuroscience and AI will boost neurotechnology

Neurotechnology refers to any technology that helps understand how the brain functions. It can be used purely for research purposes, such as experimental brain imaging to gather information about mental illness or sleep patterns. It can also be used in practical applications to influence the brain or nervous system; for example, in therapeutic or rehabilitative contexts.

Neurotechnologies have been around for some time, but dramatic improvements in hardware and software, together with the convergence of neuroscience and artificial intelligence, are poised to open up a wide range of neurotech devices and applications, and with them a growing volume of collected neurodata. According to the Harvard Business Review, the global market for neurotech is growing at a compound annual rate of 12% and is expected to reach $21bn by 2026.

Companies worldwide have started to integrate neural interfaces into watches, headphones, earbuds, hard hats, caps, and VR headsets for use in the workplace to monitor fatigue, track attention, boost productivity, enhance safety, decrease stress, and create a more responsive working environment. In the absence of guardrails and legislation on how to handle neurodata, the risks could be significant for employees and employers alike.

Employees have a right to mental privacy

The impact on our privacy will be massive in non-medical sectors such as workplaces. In the ICO’s first report on ‘neurodata’ titled Tech Futures: Neurotechnology, the UK data watchdog considers workplace monitoring as one possible application of neurotech. Examples include the use of helmets or safety equipment that measures the attention and focus of an employee in high-risk environments.

In 2019, SmartCap launched LifeBand, a fatigue-tracking headband with embedded EEG sensors that gathers brain-wave data and processes it through SmartCap’s LifeApp, which uses proprietary algorithms to assess wearers’ fatigue level on a scale from one (hyperalert) to five (involuntary sleep). When the system detects that a worker is becoming dangerously drowsy, it sends an early warning to both the employee and the manager.

Ensuring that a driver of a 40-ton truck is not falling asleep at the wheel is one application where it is difficult to argue that the driver’s right to mental privacy should be prioritized over public safety. But when it comes to deploying neurotechnology for employee hiring or measuring an employee’s productivity, the cost to employee privacy will be seen as less acceptable.

The ICO warnings come as companies such as Elon Musk’s Neuralink explore new use cases. Neuralink has recently received regulatory approval to conduct the first clinical trial of its implantable brain-computer interface. The company is now valued at $5bn, though its interface is still a long way from being a commercial product.

Neurodata is involuntary and intrinsic

As observed in the ICO report, neurodata is subconsciously generated and people have no direct control over the specific information that is disclosed. The General Data Protection Regulation (GDPR) does not explicitly define neurodata as either a distinct form of personal information or a special category of data.

Neurotechnology can collect information that people are not aware of, including emotional states, workplace engagement, and medical information about mental health. When analysing emotions or complex behaviours, the risks become particularly high. Organizations may process neurodata extensively and at large scale without applying the additional safeguards required for special category data.

Neurodata used to classify people emotionally and behaviourally (for purposes including employment) could embed biases and discriminate against people with certain neurodegenerative pathologies. The clock is ticking. Neurotechnology must be developed and used responsibly: in the future of work, employers who want to earn the trust of their employees must understand the unique dangers that this technology poses.