The UK’s intelligence agencies, including MI5 and MI6, are lobbying the UK government for a lighter-touch approach to regulating artificial intelligence (AI), according to reports from The Guardian.

The agencies reported to be lobbying are GCHQ, MI5 and MI6.


This report comes shortly after the UK’s Home Office commissioned an independent review into amending the 2016 Investigatory Powers Act (IPA), which aims to define how investigatory powers can interfere with privacy.

The review, carried out by House of Lords member David Anderson, states that its most substantial proposed amendment is a weakening of the data protections surrounding bulk personal datasets (BPDs), which could then be used to train AI.

Anderson writes that the review was carried out on the assumption that the IPA will need a complete overhaul in the 2030s in light of advancing AI technologies.

The current IPA was introduced after Edward Snowden’s 2013 leak revealed that the National Security Agency was collecting the phone records of millions of Verizon customers for surveillance purposes.


Describing AI as one of the “most striking developments” in technology since the introduction of the IPA, Anderson acknowledges that AI sits between promoting security and “[enabling]” threat actors, a balance that cannot, in his words, be “reliably predicted”.

Can AI be reliably used for security and surveillance?

The European Union’s AI Act aims to classify AI tools by the level of risk they pose, taking into account biometric surveillance and potential algorithmic bias.

The Act has already been criticised by Big Tech as a barrier to innovation, owing to the strict rules that AI systems categorised as high-risk would have to follow.

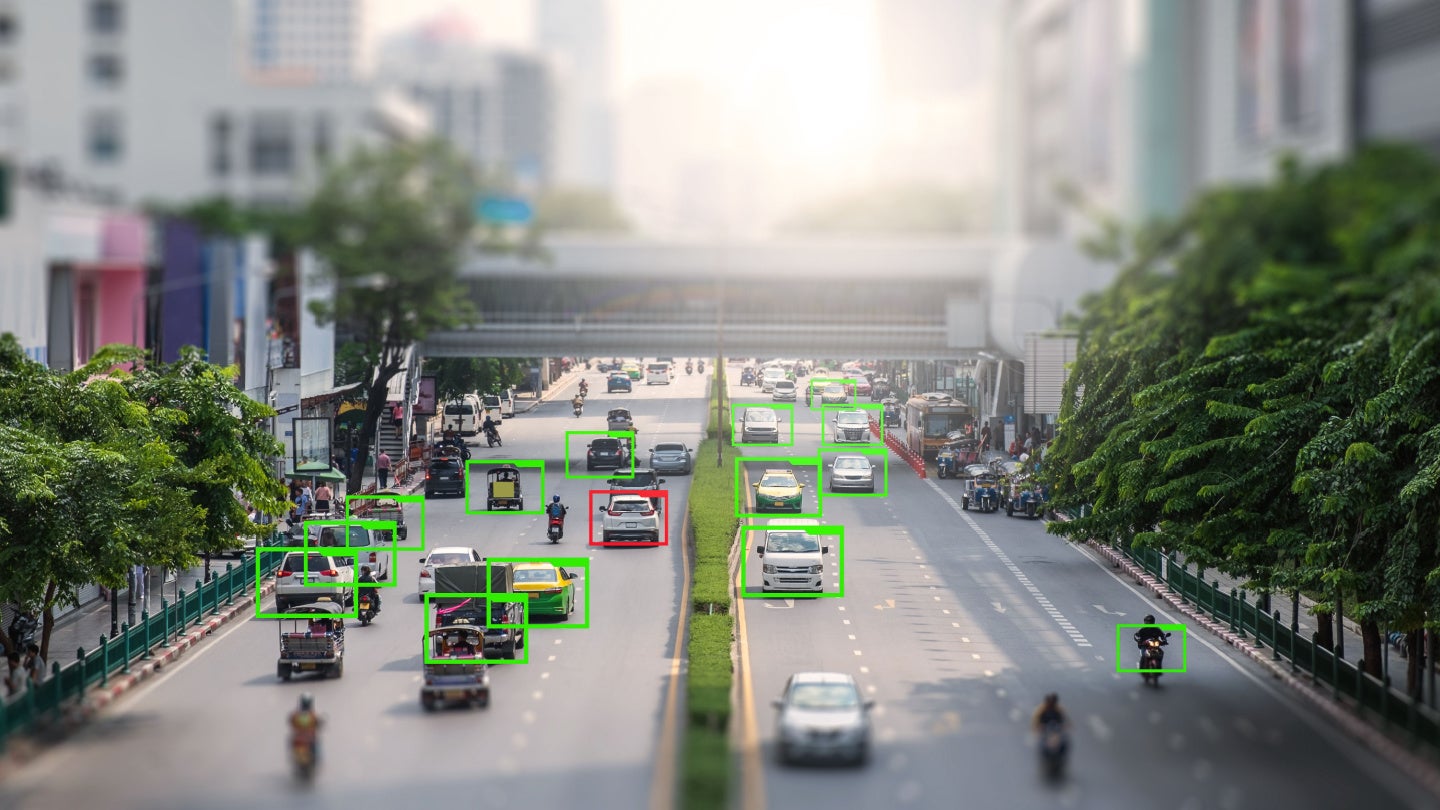

A 2023 GlobalData survey found that 17% of businesses said they were already using AI for asset monitoring and surveillance. AI is also increasingly being incorporated into domestic surveillance.

The US National Geospatial-Intelligence Agency has already introduced AI into its operations.

In conversation with NPR, Mark Munsell, the agency’s Deputy Director of the Data and Digital Innovation Directorate, stated clearly that AI was not being used “to spy on Americans” but rather to speed up imagery analysis.

When asked about the use of AI in domestic surveillance, GlobalData graduate analyst Benjamin Chin raised the serious ethical concerns that could arise.

Chin believes that AI is “well-suited to intelligence gathering and analysis” and suggested that it could eventually perform these tasks more proficiently than humans. However, integrating AI into the “observe, orient, decide, act (OODA) loop” raises questions about how much control we relinquish to machines.

While countries may wish to use AI in their intelligence agencies for fear of “falling behind”, Chin argues that there is still doubt over whether AI is ready for the task of surveillance.

Even in consumer electronics, the technology remains prone to bias and errors, which may damage its reputation as a surveillance tool.

“Furthermore,” Chin explains, “many AI algorithms operate on a ‘black box’ model in which its decision-making process is not overtly clear, leading many to question whether we can trust a system we can’t explain.”

In its 2023 thematic intelligence report on AI, GlobalData explains that as AI is increasingly used to make life-changing decisions, transparency and explainability will become essential qualities for AI developers to consider.