Nearly every aspect of the modern world is controlled by computers, and humanity’s reliance on them is only set to increase in the years to come, possibly through the development of biocomputing.

The universal language that governs every PC, tablet, and phone is binary code, a base-2 number system that represents data as sequences of 1s and 0s. A binary digit, or bit, is the smallest unit of information in binary, and each bit is either a 1 or a 0. Transistors act as switches, turning on or off depending on the electrical signals they receive; the on and off states represent 1 and 0, respectively.
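To make the idea of bits concrete, here is a short Python sketch (not from the original article) showing how a number or a piece of text maps onto the 1s and 0s that hardware stores. The helper names `to_bits` and `text_to_bits` are illustrative, and UTF-8 is assumed as the text encoding.

```python
def to_bits(value: int, width: int = 8) -> str:
    """Return the binary form of a non-negative integer,
    zero-padded to at least `width` bits (8 bits = one byte)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    return format(value, f"0{width}b")


def text_to_bits(text: str) -> list[str]:
    """Encode text as UTF-8 bytes (assumed encoding) and show each byte as 8 bits."""
    return [to_bits(byte) for byte in text.encode("utf-8")]


print(to_bits(5))           # 00000101
print(text_to_bits("Hi"))   # ['01001000', '01101001']
print(int("01000001", 2))   # 65, converting bits back to a number
```

Each character becomes one or more bytes, and each byte is simply eight on/off states, which is all a transistor-based chip ultimately manipulates.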

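Moore’s Law describes a doubling of the number of transistors on a chip roughly every two years. Loosely, this is often read as a performance gain: an algorithm might run about twice as fast on hardware from 2022 as on hardware from 2020, although more transistors do not automatically translate into proportionally faster software. First described in 1965 by the late Gordon Moore, who initially predicted a yearly doubling before revising the period to two years in 1975, Moore’s Law has largely held ever since.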
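The arithmetic behind a fixed doubling period can be sketched in a few lines of Python. This assumes an idealised, smooth exponential; real progress has been uneven, and the starting count used below is hypothetical rather than a figure for any real chip.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count forward, assuming the count doubles
    every `doubling_period` years (an idealised Moore's Law curve)."""
    return start_count * 2 ** (years / doubling_period)


# Hypothetical chip with one million transistors, projected ten years ahead.
print(projected_transistors(1_000_000, 10))                     # 32000000.0 (five doublings)
print(projected_transistors(1_000_000, 10, doubling_period=1))  # 1024000000.0 (Moore's original yearly rate)
```

Ten years at a two-year doubling period gives five doublings, a 32-fold increase; the same decade at the original one-year rate would give a roughly thousand-fold increase, which is why the choice of period matters so much for long-range projections.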

However, advances in semiconductor technology have pushed transistor dimensions toward the scale of individual silicon atoms, a hard physical limit for silicon-based transistors. This has led many to speculate on the impending death of Moore’s Law.

What is biocomputing?

Extensive research is under way into alternative technologies that could unlock a new era of computing, including artificial intelligence, neuromorphic semiconductors, and quantum computing. However, one field that remains relatively little known is biocomputing.

Situated at the intersection of computing, engineering, and molecular biology, biocomputing aims to harness biological cells and their sub-components (such as DNA) as hardware to perform computational functions.

Biocomputing and brain organoids

Brain organoids are clusters of neural cells grown in vitro from cultured pluripotent stem cells. These cells interconnect to form a three-dimensional structure that resembles, in simplified form, parts of a human brain. Brain organoids are often used in medical research as a model of the human brain to further the understanding of neurodevelopment and neurodegenerative diseases.

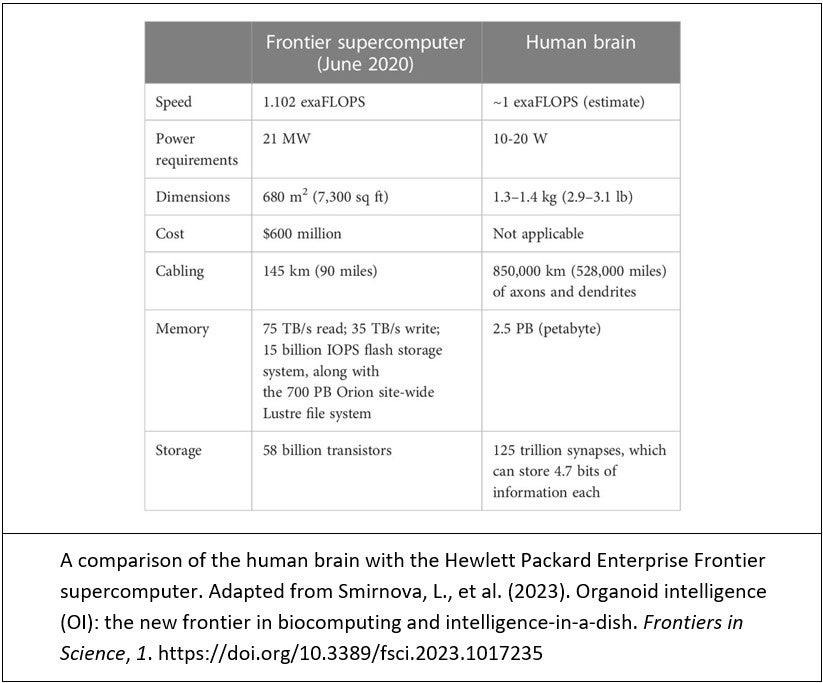

However, some researchers are now exploring whether brain organoids could be harnessed as computers. In February 2023, an international team of scientists published a paper on “Organoid Intelligence” in Frontiers in Science.

The computing power of the human brain is immense, even when compared with the most powerful supercomputers, yet it runs on a tiny fraction of their energy. Computers built from brain organoids could, in principle, inherit some of that efficiency. Neurons process information in a largely analog rather than binary fashion, which may help explain their power efficiency and potential. However, neural computation is still poorly understood, and because it does not follow the binary logic of conventional chips, it is far from clear how information could be written to an organoid or read back from it, let alone how to program one.
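The contrast between a binary switch and an analog neuron can be illustrated with a toy Python sketch. It uses the leaky integrate-and-fire model, a standard simplified textbook abstraction of a neuron; it is not how organoids are actually studied or programmed, and the parameter values (`leak`, `threshold`) are arbitrary assumptions chosen for illustration.

```python
def transistor_state(voltage: float, threshold: float = 0.5) -> int:
    """Idealised digital switch: any input voltage collapses to a single bit."""
    return 1 if voltage >= threshold else 0


def leaky_integrator(inputs, leak=0.9, threshold=1.0):
    """Toy leaky integrate-and-fire neuron (illustrative parameters).

    The membrane potential is a continuous value that accumulates input
    and decays by `leak` each step. When it reaches `threshold`, the
    neuron emits a spike (1) and the potential resets to zero.
    Returns the potential after each step and the spike train.
    """
    potential = 0.0
    trace, spikes = [], []
    for x in inputs:
        potential = potential * leak + x   # decay, then add new input
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0                # reset after firing
        else:
            spikes.append(0)
        trace.append(potential)
    return trace, spikes


print(transistor_state(0.4))                # 0
print(leaky_integrator([0.4, 0.4, 0.4])[1]) # [0, 0, 1]
```

Fed a steady input of 0.4, the switch reports 0 every time, whereas the model neuron's potential builds from 0.4 to about 0.76 and then crosses the threshold on the third step. Its behaviour depends on the history and timing of its inputs, not just on whether a single input is above a cut-off, which hints at why such systems are harder to "code" than transistor logic.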

Intelligence in a dish

Much of the brain is yet to be understood by science, and it will likely be decades before such ‘organoid intelligence’ is fully realized. Alongside the relative immaturity of neuroscience comes a host of ethical concerns, from the use of human brain cells to the question of what criteria would indicate consciousness.

It may be easy to dismiss arguments about consciousness in artificial intelligence on the grounds that it is simply clever code. But when the computer is made of the same cells that make us up, the question becomes much more complex.