Researchers from RMIT University in Melbourne, Australia, have developed a set of algorithms they claim can master an Atari video game 10 times faster than the current leader in the field, Google-owned AI firm DeepMind.

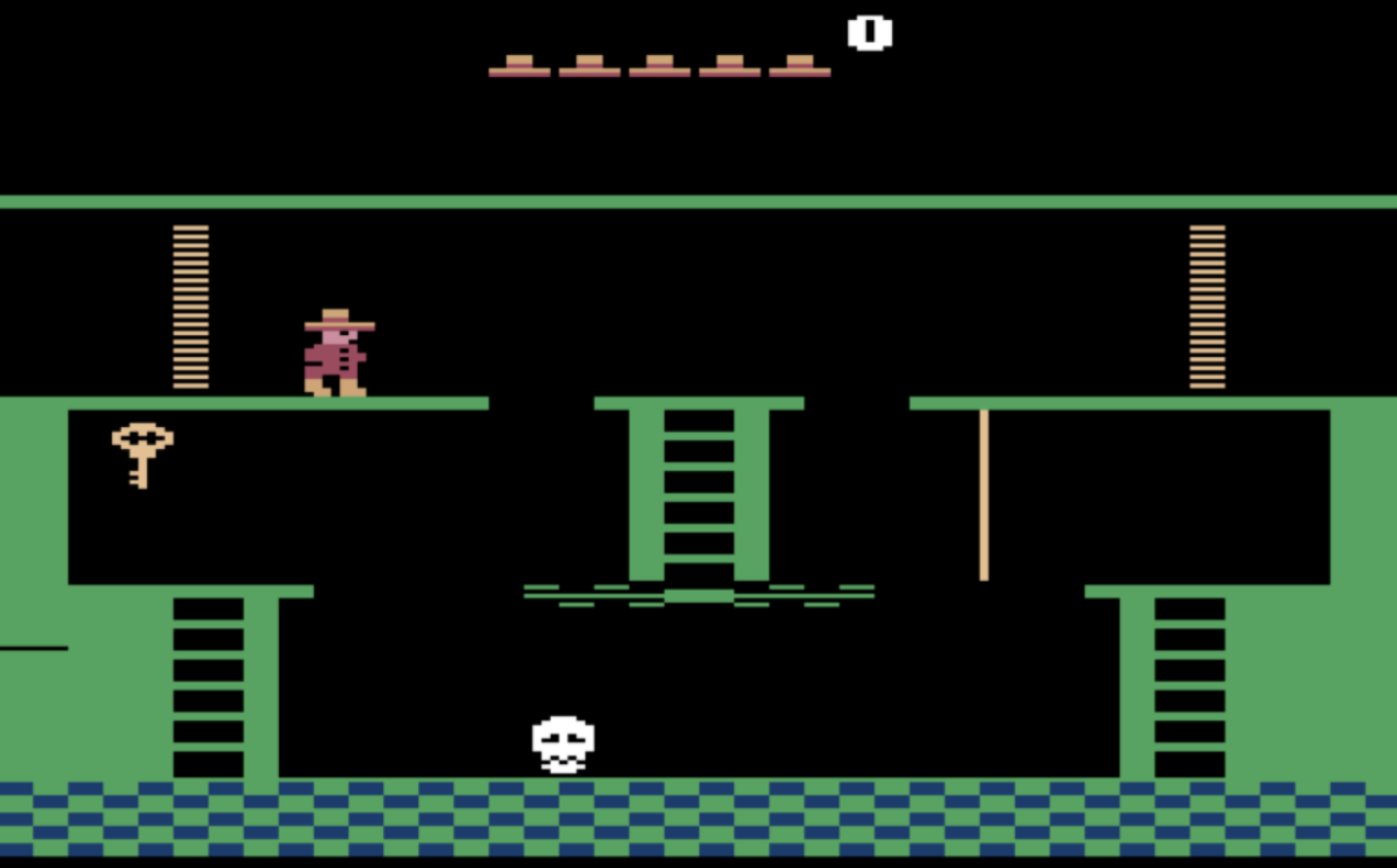

The new breed of algorithm can autonomously play Montezuma’s Revenge, a 1984 game that involves navigating traps and obstacles in a labyrinth. It learns from its mistakes by combining so-called ‘carrot-and-stick’ reinforcement learning with an intrinsic motivation approach.

This means that the AI is rewarded for being curious and exploring its environment.
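
To make the idea of rewarding curiosity concrete, here is a minimal sketch in Python. It is not the RMIT team’s algorithm, whose details are not given here. It assumes a simple count-based novelty bonus, and the class name `CuriosityBonus`, the weight `beta` and the room labels are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict
import math


class CuriosityBonus:
    """Illustrative count-based novelty bonus: rarely visited states earn more."""

    def __init__(self, beta=0.5):
        self.beta = beta                 # hypothetical weight of the curiosity bonus
        self.visits = defaultdict(int)   # state -> number of visits so far

    def shaped_reward(self, state, extrinsic_reward):
        # Record the visit, then add a bonus that shrinks as 1 / sqrt(visit count).
        self.visits[state] += 1
        bonus = self.beta / math.sqrt(self.visits[state])
        return extrinsic_reward + bonus


bonus = CuriosityBonus(beta=0.5)
first = bonus.shaped_reward("room_1", 0.0)       # first visit: largest bonus
for _ in range(3):
    repeat = bonus.shaped_reward("room_1", 0.0)  # repeat visits: bonus decays
```

In this sketch the game’s own score is the ‘carrot-and-stick’ part, while the bonus gives the agent a reason to enter rooms it has not seen before, even when the game itself pays nothing for doing so.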

The algorithm was developed by associate professor Fabio Zambetta in collaboration with RMIT’s professor John Thangarajah and Michael Dann.

“Truly intelligent AI needs to be able to learn to complete tasks autonomously in ambiguous environments,” said Zambetta.

“We’ve shown that the right kind of algorithms can improve results using a smarter approach rather than purely brute forcing a problem end-to-end on very powerful computers.

“Our results show how much closer we’re getting to autonomous AI and could be a key line of inquiry if we want to keep making substantial progress in this field.”

How will it help in the real world?

As with Google DeepMind’s game playing AI, the goal of developing such algorithms is to improve problem-solving in real-world situations.

According to Zambetta, their new system can be applied to a wide range of tasks outside of the video game world when supplied with raw visual inputs.

That could one day include self-driving cars or faster medical diagnosis.

“Creating an algorithm that can complete video games may sound trivial, but the fact we’ve designed one that can cope with ambiguity while choosing from an arbitrary number of possible actions is a critical advance,” he says.

“It means that, with time, this technology will be valuable to achieve goals in the real world, whether in self-driving cars or as useful robotic assistants with natural language recognition.”

Zambetta will unveil the new approach tomorrow at the 33rd AAAI Conference on Artificial Intelligence in the US.

DeepMind has dominated gaming AIs

In 2015, Google DeepMind’s AI famously learnt how to play 49 computer games, such as Video Pinball, to a human level and with minimal human input.

However, Montezuma’s Revenge proved too complex for the AI at the time.

Since then, DeepMind’s next iteration, AlphaGo, learnt to play the complex game of Go, beating world champion Lee Sedol in 2016. Its more general program, AlphaZero, has also beaten the most powerful programs playing Go, chess and shogi.

More recently, DeepMind’s program AlphaStar became the first AI to defeat a professional player at StarCraft II, a complex strategy video game.

And in November last year Uber’s AI, Go-Explore, also mastered Montezuma’s Revenge.
