Digital home assistants like Google Home Mini and Amazon Echo owe users more than privacy: if they are to be trusted, they must explain how they make decisions.

Fortunately, regulations such as the European Union’s General Data Protection Regulation (GDPR) will begin asking such questions. The only problem is that artificial intelligence (AI) may not be able to provide any answers.

Not long after Google launched its new Google Home Mini smart speaker, it was discovered that the device was recording users’ audio even when the activation words (“OK Google”) weren’t used.

Google was quick to blame the eavesdropping fiasco on a so-called hardware bug, a sensitive touch mechanism that allowed the unit to activate itself. It rolled out a quick update that disables the activation button and purportedly prevents devices from inadvertently recording and reporting on overheard conversations.

From now on, Google Home Mini will only record what you say after you capture its attention.

In other words, it was a simple case of user error and a misunderstanding of how one intelligent home device in particular works.

No more button, no more problem, right? Not even close.

Consider the rapidly building tsunami of such devices, driven by AI from Google, Amazon, Apple, and Baidu. They come with price tags that make them suitable not just for any home but for multiple rooms within those homes.

People are extremely bullish on the AI capabilities these devices bring, according to a recent study by Verdict in partnership with GlobalData. It showed 80 percent of those surveyed consider AI to represent a reward rather than a risk.

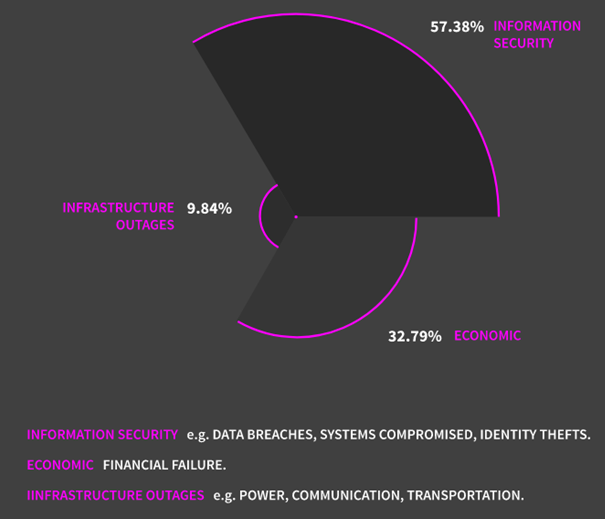

However, when asked “what do you see as the biggest risk of AI in the coming 5 to 10 years?”, almost 60 percent of those same respondents named information security as the single biggest threat looming on the horizon.

The Google Home Mini incident puts this concern into stark relief, calling for tighter information security practices for any device that collects data actively or (heaven forbid) passively.

But this is only the tip of the iceberg.

No one is talking about the bigger concern with these always-on devices that goes well beyond data sovereignty and eavesdropping.

There’s a more difficult question to answer here concerning the very AI algorithms driving these devices.

What do we know about the brains behind the digital assistant’s record button? What do we know about the algorithms responsible for the decisions Google Home Mini makes when it listens to our private conversations and accesses our private information?

How do they decide what to say, do and show? Can a user know that a recommendation or declaration is without bias and free from any agenda operating on behalf of the owner of that AI?

Anticipating these questions back in late 2016, Microsoft chief executive Satya Nadella proposed an ethical approach to AI that:

- Is designed to assist humanity;

- Is transparent;

- Maximises efficiency without destroying human dignity;

- Provides intelligent privacy and accountability for the unexpected, and

- Is guarded against biases

These seem plausible in terms of their intent.

Interestingly, when the European Union puts GDPR into effect this coming year, Nadella’s call for transparency will be put to the test.

Under what is often called GDPR’s right to explanation, users will be able to ask Google, Amazon, and others for an explanation of the inner workings of those AI black boxes.

This is all well and good, but the real problem is one of visibility, or rather a lack thereof.

Users deserve to know how and why an AI algorithm decides when we need to leave in order to reach an appointment on time or how it determines the best arrangement of smooth jazz hits for our morning commute.

Unfortunately, the deep learning algorithms used by these devices appear as black boxes, opaque even to their creators, who simply set down goals like getting to work on time and add data – lots and lots of data.

These black boxes pass all available data through many (many) layers of neural networks in order to reach those goals; knowing how the algorithms get there isn’t a priority, or even a possibility in any detailed sense, for someone watching over the proceedings.

Consider Google’s deep learning research project, Google Brain, whose neural networks devised their own encryption scheme in 2016.

Not even the Google engineers who built those networks can fully reverse-engineer how they arrived at that scheme.

At best, therefore, smart digital assistants like Amazon Alexa, Google Assistant and Apple Siri can only explain the rules, goals and data at hand, nothing more.
