Social media companies are set to testify in January about online child sexual exploitation on their platforms, according to the Senate Judiciary Committee.

The social media companies attending will be Meta, Discord, Snap, X and TikTok. They will be represented by their CEOs.

US Senator Dick Durbin posted on X that some social media companies had initially "outright refused" to attend the hearing on 31 January 2024. He also stated that the US Marshals Service had attempted to serve a subpoena on Discord at its headquarters.

Research published by the Molly Rose Foundation, a charity set up in memory of UK teenager Molly Russell, found evidence of significant failings by leading social media companies in controlling the content on their sites.

Posts that promoted suicide or self-harm ideation were at risk of being algorithmically pushed to a wider audience of younger users.

On Meta-owned Instagram Reels, a rival to TikTok, the research suggested that once the Foundation's test profile had interacted with harmful posts, up to 99% of the posts algorithmically recommended to it were considered harmful.

Meta has already been sued this year by more than 30 US states, which allege that its platforms are designed to be addictive to children and place them at risk.

In its 2023 thematic intelligence report on social media, research and analysis company GlobalData highlights the growing pressure on social media companies to be more proactive about the safety of their users.

"Regulators worldwide are increasingly trying to hold social media companies responsible for the content on their platforms," reads GlobalData's report. "Exacerbating these issues are algorithms highlighting extreme and unreliable content to boost engagement. Platforms have traditionally been free from intermediary liability, but their responses to addressing harmful content have been weak."

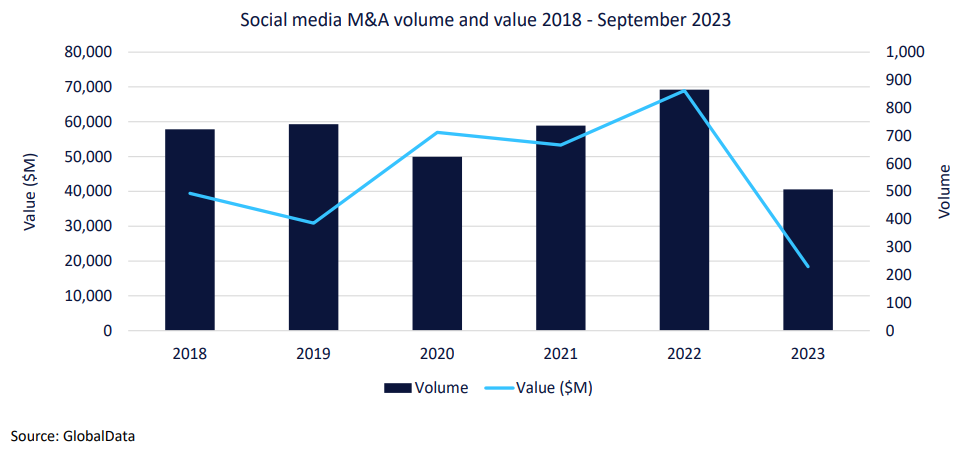

Social media companies are also facing a significant downturn in both the value and the number of M&A deals in the industry.

Whilst advances in AI have helped to attract some new investment into the industry, increased regulatory scrutiny and growing responsibility for user health and data have signalled a decline in the sector.

According to GlobalData forecasts, social media companies will now look to diversify into e-commerce and gaming in order to stay profitable.

Our signals coverage is powered by GlobalData’s Thematic Engine, which tags millions of data items across six alternative datasets – patents, jobs, deals, company filings, social media mentions and news – to themes, sectors and companies. These signals enhance our predictive capabilities, helping us to identify the most disruptive threats across each of the sectors we cover and the companies best placed to succeed.