Currently being blamed for everything from Brexit to influencing the US elections, fake news is increasingly seen as a genuine danger to democracy. And as traditional journalism has retreated into more clickbait than fact-checking, it looks like we are in real trouble when it comes to separating fact from Russian troll farm manipulation.

Technology is certainly a huge factor in the rise of fake news. It made publishing cheap and, through the intense competition that bred, made traditional sources less visible. Yet while technology is at the root of the problem, it may also be part of the solution, pointing to a much more hopeful way forward for us all.

In a recent TED talk, for example, legendary investigative journalist Christiane Amanpour said that what we need is what she calls “moral technology”, a way of helping us all keep an eye on the truth of a report. Well, Christiane, we may already have an answer for you.

The fake news lessons from the biggest data scoop so far

Graph databases, whose underlying ideas first came to prominence with Google's link-analysis algorithm many years ago, are a key weapon in the fight for the more moral online news technology that Amanpour and other concerned observers want. Uniquely able to navigate relationships in data, graph technology already has impeccable investigative journalism credentials, as it was one of the key enabling technologies used in the Panama and Paradise Papers investigations.

And graph technology is now powering probes into Russian fake news. Twitter was likely used to propagate fake news in an attempt to influence the 2016 US presidential election, and the House Intelligence Committee has released a list of 2,752 false Twitter accounts believed to be operated by a known Russian troll factory, the Internet Research Agency.

Even though these accounts and tweets were immediately removed from Twitter.com and the Twitter API, journalists at US broadcaster NBC News were still able to assemble a subset of them – and using a graph database were able to analyse the data.

The result was a story about how this form of fake news might have affected the 2016 US presidential election. Two days later, special counsel Robert Mueller's indictment specifically named the Internet Research Agency and several of the Twitter accounts and hashtags found in the NBC News data.

Detecting fake news

The reporters open-sourced the data in the hope that others could learn from it, and that those who had been caching tweets would be inspired to contribute to a combined database. But while most news articles explain what happened, the important question is how it happened, because that is what will help prevent future abuse.

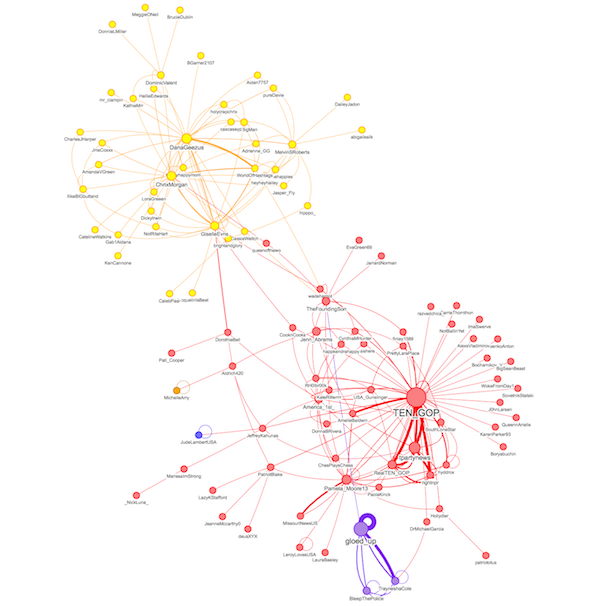

NBC found that the key to detecting fake news is spotting the hidden connections between accounts, posts, flags and websites. By visualising these non-obvious connections within a graph database, reporters, and just as importantly citizens and voters, can understand the patterns that betray fake content.

One type of account was designed to look like a normal, everyday American. These accounts had fake profile information and often tried to associate themselves with real-world events. Now that the Mueller indictment has revealed that many of these operatives travelled to the US, it is possible they actually took part in some.

Another type of Russian troll account posed as a local news outlet. For example, @OnlineCleveland, another of the Russian-controlled accounts, appeared to be a local news outlet in Cleveland. These accounts often posted exaggerated reports of violence. The third type of Russian troll account appeared to be affiliated with local political parties, organising rallies and meetups.

Inside the Russian troll network

Most of the original tweets discovered in the Russian troll network were written by a small number of users. The majority of tweets overall were retweets, because many of the troll accounts did nothing but retweet other accounts in an attempt to amplify the message.

When we look at the hashtags these Russian trolls were using, we can see that one group tweeted mainly about right-wing politics (#VoterFraud, #TrumpTrain), another group was more left-leaning, though not necessarily in a positive way (#ObamasWishlist, #RejectedDebateTopics), and a third group covered topics in the Black Lives Matter community (#BlackLivesMatter, #Racism, #BLM).
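One simple way to find groups like these is to link accounts that share hashtags and then look for connected components. The sketch below is only an illustration of that idea, not the method the NBC reporters used, which the article does not describe. The account names and the (account, hashtag) pairs are invented for the example.

```python
from collections import defaultdict

# Hypothetical sample data: (account, hashtag) pairs. The account names are invented.
tagged_tweets = [
    ("acct_a", "#VoterFraud"),
    ("acct_a", "#TrumpTrain"),
    ("acct_b", "#TrumpTrain"),
    ("acct_c", "#ObamasWishlist"),
    ("acct_c", "#RejectedDebateTopics"),
    ("acct_d", "#BlackLivesMatter"),
    ("acct_d", "#BLM"),
    ("acct_e", "#Racism"),
    ("acct_e", "#BLM"),
]


def hashtag_communities(pairs):
    """Group accounts linked, directly or indirectly, by shared hashtags."""
    parent = {}

    def find(node):
        # Union-find lookup with path halving.
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    # Joining each account to each hashtag it used merges accounts
    # that share a hashtag into the same component.
    for account, tag in pairs:
        parent[find(("account", account))] = find(("tag", tag))

    groups = defaultdict(set)
    for kind, name in list(parent):
        if kind == "account":
            groups[find((kind, name))].add(name)
    return sorted(sorted(group) for group in groups.values())


communities = hashtag_communities(tagged_tweets)
print(communities)
```

On real data a single popular hashtag would merge everything into one component, so a production analysis would more likely weight the links or use a dedicated community detection algorithm.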

Each of these three clusters tended to have a small number of original content generators, with the bulk of the community retweeting to amplify the message. For example, one account sent more than 3,200 original tweets, averaging about 7 tweets per day. By contrast, another account authored only 21 original tweets out of more than 9,000 sent.
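The split between content generators and amplifiers can be measured as the share of each account's tweets that are original. The following is a minimal sketch that assumes each tweet is available as an (account, is_retweet) pair. The account names are hypothetical and the counts are rounded from the figures above ("more than 3,200" and "more than 9,000"), so they are illustrative only.

```python
from collections import Counter


def original_share(tweets):
    """Return {account: fraction of its tweets that are original rather than retweets}."""
    totals = Counter()
    originals = Counter()
    for account, is_retweet in tweets:
        totals[account] += 1
        if not is_retweet:
            originals[account] += 1
    return {account: originals[account] / totals[account] for account in totals}


# Hypothetical accounts, with counts rounded from the article's figures.
sample = (
    [("generator", False)] * 3200    # an account posting only original tweets
    + [("amplifier", False)] * 21    # 21 original tweets...
    + [("amplifier", True)] * 8979   # ...out of 9,000 sent in total
)

shares = original_share(sample)
for account, share in sorted(shares.items()):
    print(f"{account}: {share:.2%} original")
```

An account whose share sits close to zero is behaving as a pure amplifier, which is the pattern described above.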

Preventing future fake news

So, what can social media platforms and governments do to monitor and prevent future abuse? The verdict is clear: it is all about being able to spot these kinds of connections. It is difficult to identify these relationships in a dataset if you are not using a technology purpose-built for working with connected data.

It is even more difficult if you are not looking for connections in the first place. This is where graph technology comes in, as it was designed specifically for this kind of analysis and navigation of connected data.

To uncover foul play, it’s then essential to detect and understand the patterns of behaviour reflected by those connections. In this case, a simple graph algorithm (PageRank) was able to illustrate that most of the Russian troll accounts behaved like single-minded bees with a focused job and not like normal humans.
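To give a feel for how PageRank behaves on this kind of data, here is a minimal pure-Python sketch. It assumes the graph is built from retweets, with an edge from each retweeting account to the account it retweeted. The article does not say how the reporters modelled their graph, so that edge definition, the account names and the parameter values are all assumptions made for illustration.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Power-iteration PageRank over directed (source, target) edges."""
    nodes = sorted({node for edge in edges for node in edge})
    out_links = {node: [] for node in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    count = len(nodes)
    rank = {node: 1.0 / count for node in nodes}
    for _ in range(iterations):
        # Every node starts each round with the "random jump" share.
        new_rank = {node: (1.0 - damping) / count for node in nodes}
        for src in nodes:
            # A node with no outgoing edges spreads its rank over all nodes.
            targets = out_links[src] or nodes
            share = damping * rank[src] / len(targets)
            for dst in targets:
                new_rank[dst] += share
        rank = new_rank
    return rank


# Hypothetical retweet graph: four amplifier accounts all retweet one source.
edges = [
    ("amp1", "source"),
    ("amp2", "source"),
    ("amp3", "source"),
    ("amp4", "source"),
]

ranks = pagerank(edges)
top_account = max(ranks, key=ranks.get)
print(top_account, round(ranks[top_account], 3))
```

In this toy graph the retweeted account collects most of the score while the amplifiers share what is left, which is the kind of lopsided structure that makes a coordinated network stand out.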

So graph visualisation highlights the complex connections among social media data elements – and just as with detecting the secret financial affairs of the 1% in the Panama and Paradise Papers, the key to detecting and then fighting back against fake news has to be in spotting the connections that reveal the manipulation.

Technology may be the reason we all face this modern-day scourge. The good news is that it may also offer a way to tackle it.