The metaverse company Somnium Space is introducing a feature that lets users arrange for their avatar to persist in the metaverse after they shuffle off this mortal coil.

It is not the first time that users happily ambling through the latest technological novelty have unexpectedly found themselves on the edge of a philosophical crevasse. More practically, it is another headache for regulators.

How the avatar works

Upon losing his father to cancer, Somnium Space’s CEO and founder, Artur Sychov, was saddened to reflect that his then-infant children would have no memories of their grandfather. This sadness inspired the ‘Live Forever’ mode.

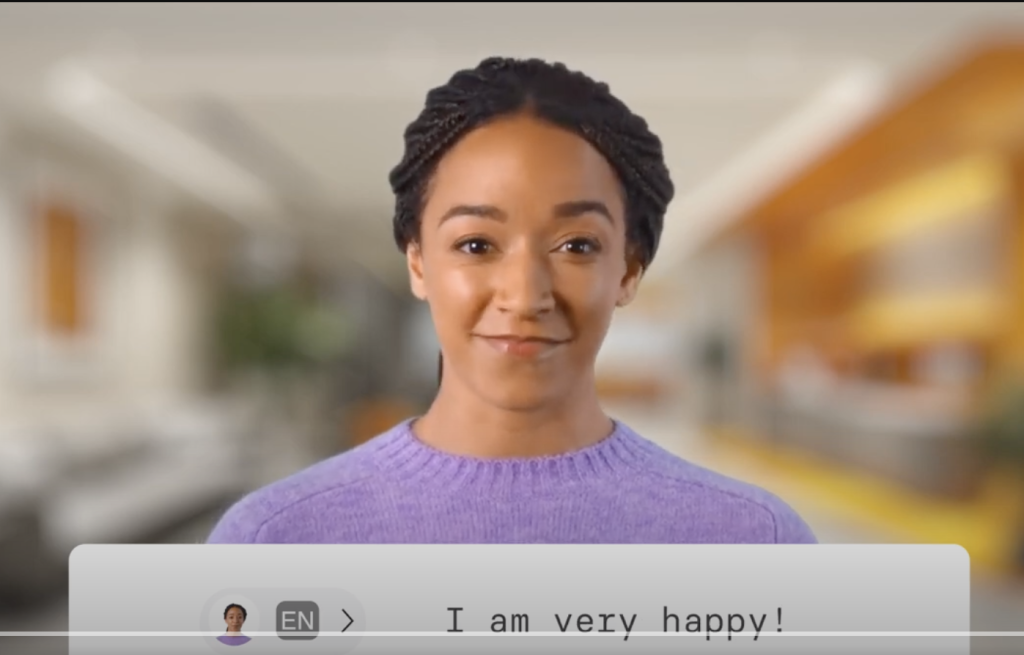

Through their surviving avatar, the ‘Live Forever’ mode will attempt to replicate the way a user behaved when alive, with information from stored data (including their messages and movements). Of course, technology is as yet insufficient to convincingly recreate a full human from such material, but it likely will become so in the future. Even the suggestion of Somnium Space’s new mode is enough to make people stop and think about the possibilities.

Technological necromancy

Humans employing external tools to relive memories of the dead is nothing new, but Somnium Space may differ. Letters have been used for centuries to leave something behind—photos for decades. The elderly are increasingly recording video messages for descendants to view, and companies offering to record and produce interviews with family members have succeeded. Some will say Somnium Space’s suggestion is simply the next progression: a change in degree, not in kind. But those who disagree will assert that a line is crossed when we try to ‘animate’ the dead. In a recorded video message, the deceased will only say things they were actually filmed saying. In Somnium Space, their avatars will say things that the program thinks they would have said, but which they did not actually say.

We have seen related questions raised before. Viewers were shocked to see how humanlike Google’s Duplex voice assistant sounded when it debuted in 2018. Google showed Duplex calling a hair salon and booking an appointment, with the hairstylist receiving the call unaware that they were not talking to a human. Some questioned whether the hairstylist had been treated unethically.

Regulation will inevitably struggle to keep up

The practical problem with technologies that seek to appear human, or that can, is regulatory.

Senator Orrin Hatch asking Mark Zuckerberg how Facebook made money if users did not pay is a recent example of a troubling issue with the tech regulatory system: regulators infamously struggle to understand the technology they are supposed to regulate.

Regulation is usually a matter of catching up to whatever innovation Big Tech has made and is very rarely a matter of anticipation. When the moral consequences of a single aberration are grave enough, this is unacceptable.