A recent GlobalData survey of more than 1,700 senior executives found that 50% had a more positive view of AI than a year ago. More than three-quarters of respondents said AI helped their company survive the Covid-19 pandemic, and 43% confirmed that they would be accelerating AI investment over the next 12 months, supporting the theory that business sentiment towards AI is increasingly positive.

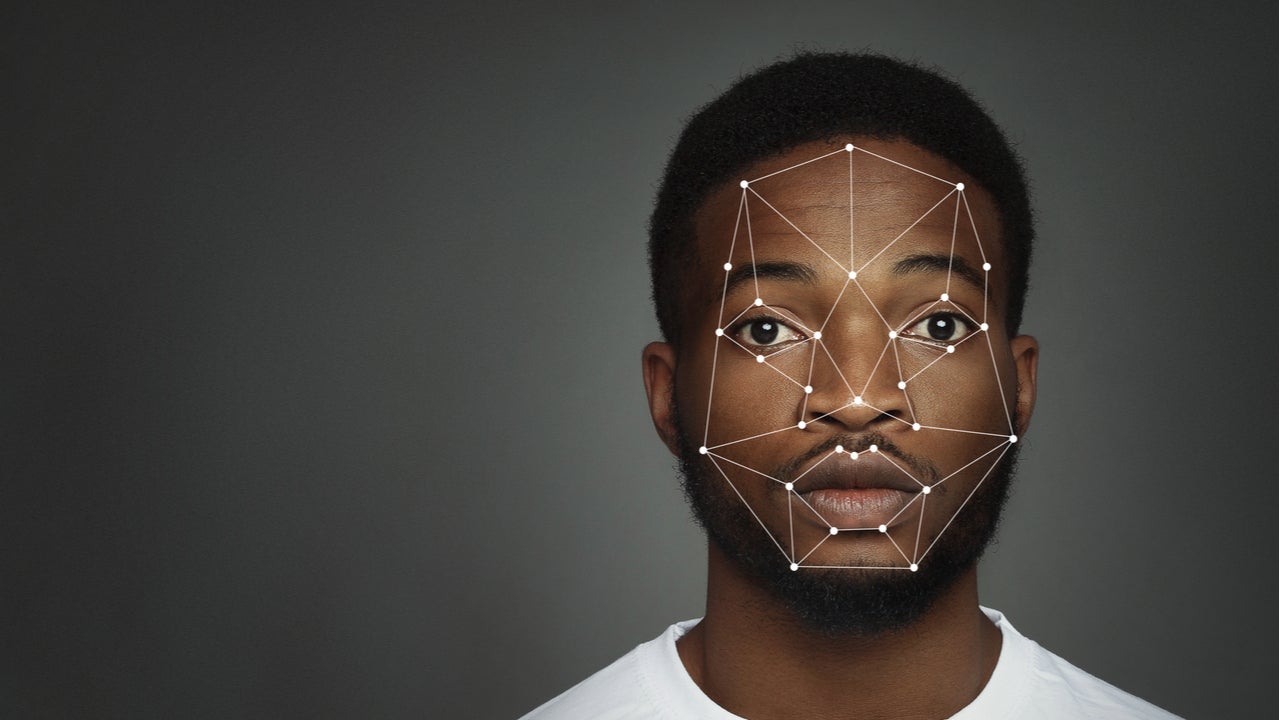

However, as AI’s transformative potential has become clear, so, too, have the risks posed by unsafe or unethical AI systems. Recent controversies around facial recognition, automated decision-making, and Covid-related tracking have shown that embedding trust in AI is a necessity.

Biased human perception is a problem

Several incidents have shown that AI-powered algorithms can be biased, particularly against women and people of color. In 2018, Amazon’s AI recruiting tool, which was trained to follow historical hiring trends across the tech industry, was found to be biased against female candidates. In January 2019, an MIT report suggested that facial recognition software is less accurate at identifying people with darker skin pigmentation. Similarly, a study published in late 2019 by the National Institute of Standards and Technology (NIST) found evidence of racial bias in nearly 200 facial recognition algorithms.

AI is a mirror image of human intelligence, beliefs, and perceptions. An AI system can perform correctly but still be biased if its underlying algorithms are built on a data set that perpetuates racial and gender biases. The problems with AI often aren’t apparent in the lab. Instead, the actual consequences are often only realized once the system is deployed in a real-world scenario. While creating an AI system and focusing on its operational excellence, we must also prioritize its ethical aspects and rethink it at an academic level to account for the complexity of human behavior.

Big Tech companies also need to address problems in their ethical AI practices. In recent months, Google has restructured its ethical AI team, which is responsible for creating responsible AI, and controversially dismissed its two co-leaders (Timnit Gebru was fired in December 2020, and Margaret Mitchell in February 2021). Gebru was removed for saying that Google’s language processing models were inefficiently trained. Mitchell, in turn, defended Gebru publicly and was reportedly fired for using an automated script to look through her emails to find evidence of discrimination against Gebru. As both leaders are women, and one is Black, their sudden termination raised questions over racial and gender diversity at Google.

Instilling trust in AI

In 2020, tech leaders such as Amazon, IBM, and Microsoft announced that they would no longer sell facial recognition (FR) tools, either to law enforcement or at all. Other bodies, including the World Economic Forum (WEF), also took action. In January 2021, the WEF formed the Global AI Action Alliance (GAIA), a standard-setting group for responsible AI development and deployment worldwide. The group brings together more than 100 companies, governments, civil society organizations, and academic institutions to accelerate AI adoption in the global public interest.

Instilling trust in AI means avoiding any bias in the underlying data sets on which AI tools are trained. There is an increased need to focus on unbiased synthetic training data and federated learning. Along with these reactive measures, proactive actions are necessary.

First, before implementation, algorithms should be scrutinized for any trace of bias. This can be done through simulation testing techniques and comparative analysis by running algorithms alongside the human decision-making process.

Second, in critical decision-making, AI should be used to help human decision-makers instead of performing autonomously.

Third, organizations should adopt an incremental improvement approach to their AI systems and follow responsible operational practices. By following these steps, organizations can proactively address the issue of biased AI.
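As a rough illustration of the first step, the sketch below compares an algorithm's decisions with those of human reviewers on the same cases and checks how evenly each selects candidates across groups. Everything in it is an assumption made for illustration: the group labels, the sample decisions, the function names, and the 0.8 threshold (a commonly cited rule of thumb, not a standard drawn from this article). It is a minimal starting point, not a complete bias audit.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1 = selected) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


def agreement_rate(model_decisions, human_decisions):
    """Share of cases where the algorithm and the human reviewer agree."""
    matches = sum(m == h for m, h in zip(model_decisions, human_decisions))
    return matches / len(model_decisions)


# Hypothetical audit data: the same eight cases decided by both parties.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
model = [1, 1, 1, 0, 1, 0, 0, 0]
human = [1, 1, 0, 0, 1, 1, 0, 0]

model_rates = selection_rates(model, groups)
human_rates = selection_rates(human, groups)

THRESHOLD = 0.8  # assumed cut-off for flagging a disparity

for name, rates in (("algorithm", model_rates), ("human", human_rates)):
    ratio = disparate_impact_ratio(rates)
    flag = "review needed" if ratio < THRESHOLD else "ok"
    print(f"{name}: rates={rates} ratio={ratio:.2f} ({flag})")

print(f"agreement between algorithm and human: {agreement_rate(model, human):.2f}")
```

In this made-up sample the algorithm selects group A three times as often as group B while the human reviewers select both groups equally, which is the kind of gap that would warrant a closer look before deployment.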
