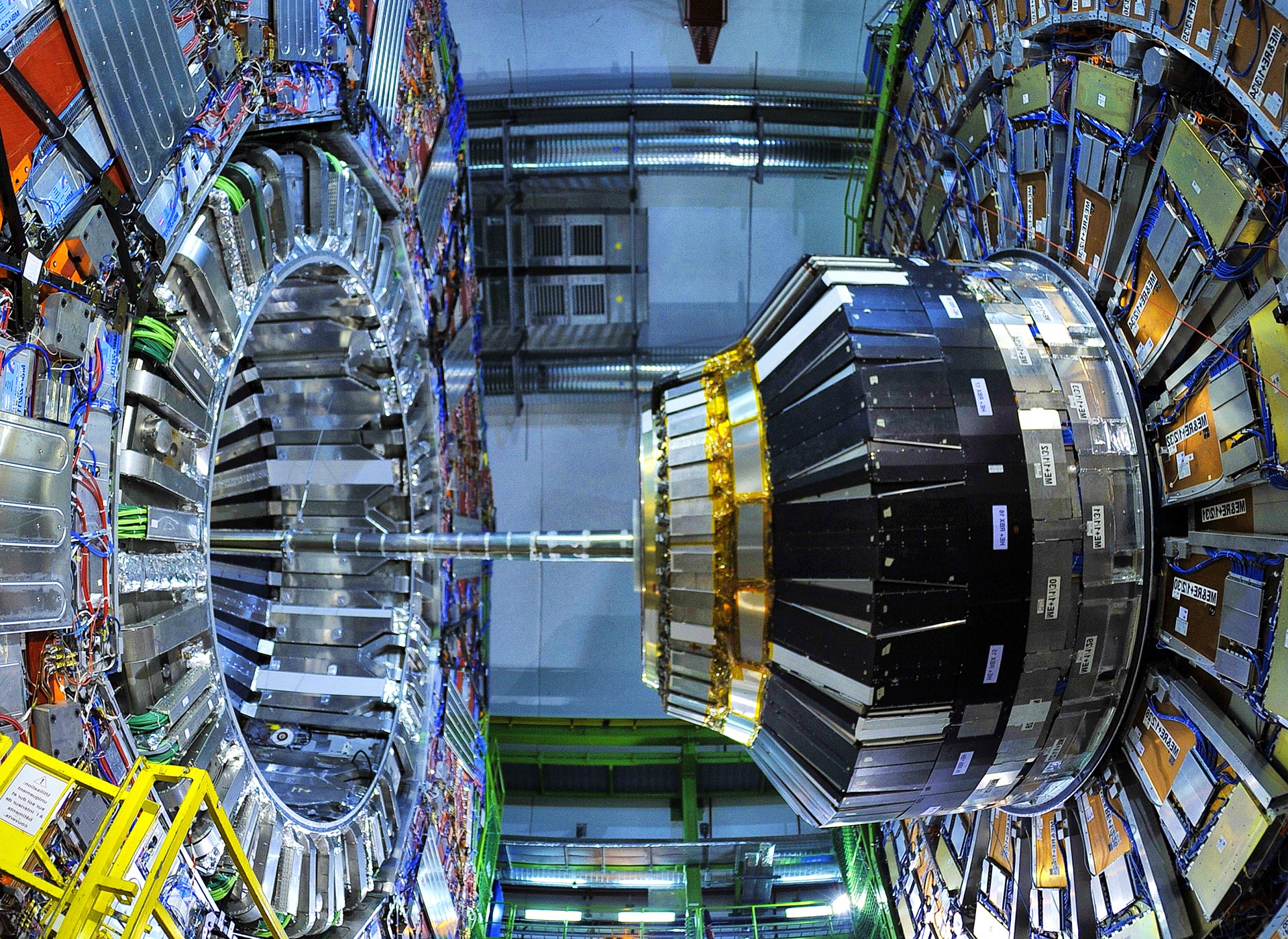

Located up to 175m beneath the ground near Geneva, Switzerland, the Large Hadron Collider (LHC) is the biggest particle collider in the world.

Physicists believe that the LHC could play an important role in answering open questions about the laws of physics. However, for this to happen, CERN must first deal with the practicalities of storing the enormous volume of data the collider produces.

Verdict heard from the European Organization for Nuclear Research (CERN) on the importance of networks in enabling cutting-edge discoveries.

“After electricity, networking is the most important thing at CERN”

Inside the LHC, which is made up of a ring of superconducting magnets, particles travelling at almost the speed of light collide with each other. Scientists can observe up to 1.7 billion proton-proton collisions per second, producing a data volume of more than 7.5 terabytes per second, so CERN’s data centres must have the capacity to handle very large volumes of data.
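As a rough sanity check, those two figures imply an average of a few kilobytes of raw detector data per collision. The snippet below is only that arithmetic, assuming decimal units (1 TB = 10^12 bytes) and treating “up to” and “more than” as if they were exact values:

```python
# Figures quoted in the article; decimal units are an assumption.
collisions_per_second = 1.7e9   # "up to 1.7 billion" proton-proton collisions per second
bytes_per_second = 7.5e12       # "more than 7.5 terabytes per second"

bytes_per_collision = bytes_per_second / collisions_per_second
print(f"~{bytes_per_collision / 1e3:.1f} kB per collision")  # ~4.4 kB per collision
```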

Although the science behind these experiments is complex, CERN faces the same challenges as other organisations when it comes to processing, storing and managing data.

Only a small fraction of the collisions observed in the LHC can lead to new discoveries, so this colossal volume of data must be filtered to make it manageable. Tony Cass, Communications Systems group leader in the CERN IT department, explains just how important networking is when dealing with data on this scale:

“After electricity, networking is the most important thing at CERN. Physicists obviously need it to move the data from the experiments to the Data Centre where it is processed and then outside to our partners around the world. Then we have 2,500 staff who need it for all our administrative applications, to access the databases and we have to serve 13,000 users who regularly use it for email, for the web, for everything. If electricity does not work and the network does not work, then CERN does not work.

“Internal and external network communications are essential for CERN. State-of-the-art network equipment and over 50,000 km of optical fibre provide the infrastructure that is crucial for running the computing facilities and services of the laboratory as well as for transferring the colossal amount of LHC data to and from the Data Centre.”

CERN and Juniper Networks, a US-based company that develops networking products, have collaborated to improve CERN’s network capacity. CERN has deployed Juniper Networks QFX Series and EX Series Ethernet switches and uses Tungsten Fabric to meet its “extreme computing” needs, serving not only users on site but also the 13,000 physicists around the world who receive near-real-time access to the data.

“CERN needs to store a deluge of research data”

As part of the partnership, Juniper supports the operations and experiments at the LHC, as well as the CERN Data Centre. This has enabled researchers around the world to receive data from the LHC experiments for analysis. In the last 12 months, 370 petabytes of data have moved across the network.
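Spread evenly over a year, 370 petabytes corresponds to a sustained average of roughly 94 gigabits per second. The calculation below assumes decimal petabytes and a 365-day year; real traffic is bursty, so peak rates will be higher than this average:

```python
PETABYTE = 10**15                   # decimal petabyte (assumption)
SECONDS_PER_YEAR = 365 * 24 * 3600  # 365-day year (assumption)

moved_bytes = 370 * PETABYTE        # data moved in the last 12 months, per the article
avg_bytes_per_s = moved_bytes / SECONDS_PER_YEAR
avg_gbit_per_s = avg_bytes_per_s * 8 / 1e9   # bytes -> bits, then to gigabits

print(f"average: {avg_bytes_per_s / 1e9:.1f} GB/s = {avg_gbit_per_s:.0f} Gb/s")
# average: 11.7 GB/s = 94 Gb/s
```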

Cass explains that the CERN Data Centre runs over two million tasks every day, and that the storage of research data is a necessity:

“CERN needs to store a deluge of research data. Collisions in the LHC generate particles that decay in complex ways into even more particles. Electronic circuits record the passage of each particle through a detector as a series of electronic signals, and send the data to the CERN Data Centre for digital reconstruction. The digitised summary is recorded as a ‘collision event’. During LHC operation, nearly 1 billion particle collisions take place every second inside the LHC experiments’ detectors. A ‘trigger’ system selects events that are potentially interesting for further analysis, reducing the petabytes-per-second stream to a more manageable 200 to 300MB/s.”
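To illustrate the idea of a trigger (keep the rare, potentially interesting events and discard the rest), here is a deliberately toy sketch. The event format, the “energy” field and the threshold are all hypothetical; real LHC trigger systems use multiple selection stages and physics-specific criteria, none of which is modelled here:

```python
import random

def toy_trigger(events, threshold):
    """Keep only events whose (hypothetical) energy value exceeds the threshold."""
    return [e for e in events if e["energy"] > threshold]

random.seed(42)  # reproducible toy data
# Hypothetical events: energies drawn from an exponential distribution (mean 10),
# so most events are low-energy "background" and very few lie in the tail.
events = [{"id": i, "energy": random.expovariate(1 / 10.0)} for i in range(100_000)]

kept = toy_trigger(events, threshold=100.0)
print(f"kept {len(kept)} of {len(events)} events "
      f"(reduction factor ~{len(events) / max(len(kept), 1):.0f}x)")
```

The point is the shape of the pipeline, not the numbers: a cheap per-event test applied to everything, so that only a tiny subset has to be stored and shipped downstream.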

Cass explains that even after this drastic cut in the number of events to be analysed, the volume of data remains huge:

“Even after this drastic data reduction, the LHC experiments produce about 90 petabytes of data per year, and an additional 25 petabytes of data are produced per year by other (non-LHC) experiments at CERN.

“Archiving the vast quantities of data is an essential function at CERN. At the beginning of 2019, 330 PB (330 million gigabytes) of data were permanently archived in the CERN Data Centre, the equivalent of more than 2,000 years of non-stop HD video recording.”
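The figures in Cass’s answer can be cross-checked with a few lines of arithmetic. The sketch assumes decimal units (1 PB = 10^15 bytes) and a 365.25-day year; the last step shows that “more than 2,000 years” of HD video implies a recording bitrate of at most about 42 Mb/s, which is an inference from the quoted numbers, not a figure CERN gives:

```python
PB = 10**15   # decimal petabyte (assumption)
GB = 10**9    # decimal gigabyte (assumption)

# Annual production quoted by Cass
annual_lhc = 90 * PB
annual_other = 25 * PB
print(f"total new data per year: {(annual_lhc + annual_other) // PB} PB")  # 115 PB

# Archive size at the beginning of 2019, expressed in gigabytes
archive = 330 * PB
print(f"archive: {archive // GB:,} GB")  # 330,000,000 GB

# Implied video bitrate if 330 PB equalled exactly 2,000 years of recording
seconds = 2000 * 365.25 * 24 * 3600
implied_mbit_per_s = archive * 8 / seconds / 1e6
print(f"implied bitrate: ~{implied_mbit_per_s:.0f} Mb/s")  # ~42 Mb/s
```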

As for any organisation, a robust infrastructure that is also simple to deploy is essential for CERN, particularly as it embarks on its next round of LHC experiments. A fast and reliable network is vital for transporting data from where it is generated to where it is processed and analysed.

According to Juniper, “the LHC experiments’ trigger and data acquisition systems handle data filtering, collection and infrastructure monitoring, and Juniper’s switching portfolio provides high-throughput connectivity to support the data collection and infrastructure monitoring”.

Automation is critical to the network

Unsurprisingly, given the level of processing required, automation plays a central role in configuring and managing the 400 routers and switches across CERN’s centre. Stefan Stancu, network and software engineer in the IT department at CERN, said:

“Automation is critical to configure, deploy, and manage a very large-scale, multivendor network. All our network is provisioned automatically. A service technician can change a switch, and our software tools then fully provision its configuration in a single command. With hundreds of routers and thousands of switches, manual configuration would be prone to human errors. Furthermore, we automatically update the contents of all routers’ access control lists on a regular basis, and we rely on Juniper’s advanced configuration interface to detect changes and apply the updates only when necessary.”
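Stancu does not describe how the change detection works internally. The sketch below only illustrates the general pattern of applying an update when, and only when, the desired state differs from the current one. The `Router` class and `sync_acl` function are hypothetical, and nothing here uses a Juniper or CERN API:

```python
from dataclasses import dataclass, field

@dataclass
class Router:
    """Hypothetical stand-in for a router; a real tool would talk to the device."""
    name: str
    acl: list[str] = field(default_factory=list)  # ordered ACL entries
    pushes: int = 0                               # how many updates were sent

    def apply_acl(self, rules: list[str]) -> None:
        self.acl = list(rules)
        self.pushes += 1

def sync_acl(router: Router, desired: list[str]) -> bool:
    """Push `desired` only if it differs from the router's current ACL.

    ACL entries are typically evaluated in order, so the lists are compared
    in order rather than as sets. Returns True if an update was sent.
    """
    if router.acl == desired:
        return False  # already in sync: nothing is sent to the device
    router.apply_acl(desired)
    return True

# Hypothetical desired ACL, e.g. generated from a central database
desired = ["permit 10.0.0.0/8", "deny any"]
r = Router("rtr-1", acl=["permit 10.0.0.0/8"])

print(sync_acl(r, desired))  # True: the ACL differed, so it was pushed
print(sync_acl(r, desired))  # False: already up to date, no push
```

Running the same sync repeatedly is safe: devices that are already correct are left untouched, which is what makes regular, fully automatic updates practical at scale.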

Cass explains that automation is central to managing the network:

“If I have to give just one word, it has to be automation. Things have to work simply and easily to be scalable at that level. Everything we do is controlled from a central database. All of the automation follows from that. I think Juniper fits well into that because it does not focus on proprietary solutions, it focuses on standard interfaces. And that is what enables our small engineering team to support many different vendors.”
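One common way to drive a multivendor network from a single database is to store vendor-neutral records and translate them into each vendor’s configuration format. The sketch below assumes that pattern; the inventory, device names and both output formats are invented for illustration and are not CERN’s tooling or any real vendor’s syntax:

```python
from typing import Callable

# Hypothetical central database: the single source of truth for every device.
INVENTORY: dict[str, dict] = {
    "sw-01": {"vendor": "vendor_a", "mgmt_ip": "10.1.0.11", "vlans": [10, 20]},
    "sw-02": {"vendor": "vendor_b", "mgmt_ip": "10.1.0.12", "vlans": [10, 30]},
}

def render_vendor_a(name: str, rec: dict) -> str:
    # Invented line-based syntax standing in for one vendor's config language
    lines = [f"hostname {name}", f"management-address {rec['mgmt_ip']}"]
    lines += [f"vlan {v}" for v in rec["vlans"]]
    return "\n".join(lines)

def render_vendor_b(name: str, rec: dict) -> str:
    # Invented key = value syntax standing in for a second vendor
    lines = [f"system.hostname = {name}", f"system.mgmt_ip = {rec['mgmt_ip']}"]
    lines += [f"vlans.{v}.enabled = true" for v in rec["vlans"]]
    return "\n".join(lines)

RENDERERS: dict[str, Callable[[str, dict], str]] = {
    "vendor_a": render_vendor_a,
    "vendor_b": render_vendor_b,
}

def provision(name: str) -> str:
    """Build a device's complete configuration purely from its database record."""
    rec = INVENTORY[name]
    return RENDERERS[rec["vendor"]](name, rec)

# If sw-02 is swapped for new hardware, one call regenerates its whole config.
print(provision("sw-02"))
```

Because every configuration is derived from the database, adding a vendor means adding one renderer, while the records and the rest of the automation stay the same.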

Looking to the future

Not only does CERN’s future research have the potential to improve our understanding of what makes up the universe, but it will also mean the network has to meet new challenges. Vincent Ducret, network engineer in the CERN IT department, said:

“CERN needed to increase the capacity of its data centres, including servers, storage, and networking, to fuel the next wave of discovery. The network needed to be faster and more redundant. The network also had to be programmable, enabling automated configuration and management, and adapt easily to changing requirements.

“The goal wasn’t only more capacity. We needed the network path to be more dynamic. We wanted the ability to use technologies like VXLAN for greater flexibility in connecting servers over the network.
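For readers unfamiliar with VXLAN: it carries Ethernet frames inside UDP packets, prefixed by an 8-byte header whose 24-bit VXLAN Network Identifier (VNI) allows about 16 million isolated segments, compared with 4,094 usable IEEE 802.1Q VLAN IDs. The sketch below builds and parses that header as laid out in RFC 7348; it shows the encapsulation format only and says nothing about how CERN actually uses VXLAN:

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # 'I' flag set: the VNI field is valid
    # Word 1: flags (8 bits) + 24 reserved bits; word 2: VNI (24 bits) + 8 reserved bits
    return struct.pack("!II", flags << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the 'I' flag."""
    word1, word2 = struct.unpack("!II", header[:8])
    if not (word1 >> 24) & 0x08:
        raise ValueError("I flag not set")
    return word2 >> 8

hdr = vxlan_header(5001)
print(hdr.hex())       # 0800000000138900
print(parse_vni(hdr))  # 5001
```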

“Juniper was selected as a supplier in this context and in view of preparing the network for the LHC Run 3 and also for the High Luminosity LHC to be commissioned in 2024. The latter will increase the precision of the ATLAS and CMS detectors. The requirements for data and computing will grow dramatically, with rates expected to be around 500 petabytes per year.”
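To put the expected 500 petabytes per year in context, the snippet below compares it with the roughly 90 petabytes per year the LHC experiments produce today and converts it into a sustained average network rate. It assumes decimal petabytes and a 365-day year, and, like any average, it understates peak demand:

```python
PB = 10**15                         # decimal petabyte (assumption)
SECONDS_PER_YEAR = 365 * 24 * 3600  # 365-day year (assumption)

current_lhc = 90   # PB/year from the LHC experiments today, per the article
future = 500       # PB/year expected for the High Luminosity LHC, per the article

growth = future / current_lhc
avg_gbit_per_s = future * PB * 8 / SECONDS_PER_YEAR / 1e9
print(f"growth: ~{growth:.1f}x; average rate: ~{avg_gbit_per_s:.0f} Gb/s")
# growth: ~5.6x; average rate: ~127 Gb/s
```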
